It’s no surprise that AI doesn’t always get things right. Occasionally, it even hallucinates. However, a recent study by Apple researchers points to more significant flaws in how AI models handle mathematical reasoning.

As part of the study, Apple scientists asked a Large Language Model (LLM) the same question multiple times, worded in slightly different ways, and were astounded to find that the answers varied unexpectedly. The variations were most prominent when numbers were involved.

Apple's Study Suggests Big Problems With AI's Reliability

The research, published on arXiv, concluded there was “significant performance variability across different instantiations of the same question, challenging the reliability of current GSM8K results that rely on single point accuracy metrics.” GSM8K is a dataset of more than 8,000 diverse grade-school math questions and answers.

Apple researchers found that performance could vary by as much as 10%. Even slight variations in a prompt can cause colossal problems with the reliability of the LLM’s answers.
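To make the point about single-point accuracy concrete, here is a minimal Python sketch. The outcomes in `runs` are made up for illustration and are not taken from the study; each row stands for one hypothetical re-wording of the same set of ten questions, and `accuracy` is a helper name chosen here.

```python
from statistics import mean


def accuracy(outcomes):
    """Fraction of correct answers in one run (a list of booleans)."""
    return sum(outcomes) / len(outcomes)


# Hypothetical results, NOT data from the Apple study.
# Each row is one instantiation of the same ten question templates.
runs = [
    [True] * 9 + [False] * 1,
    [True] * 8 + [False] * 2,
    [True] * 10,
]

scores = [accuracy(run) for run in runs]
spread = max(scores) - min(scores)

# A single run reports one number; several runs reveal how much it moves.
print(f"single run: {scores[0]:.0%}")
print(f"mean over runs: {mean(scores):.0%}, spread: {spread:.0%}")
```

Reporting only the first row would hide the gap between the best and worst wording, which is the kind of variability the researchers describe.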

In other words, you might want to fact-check your answers anytime you use something like ChatGPT. That's because, while it may sometimes look like AI is using logic to give you answers to your inquiries, logic isn’t what’s being used.

AI, instead, relies on pattern recognition to provide responses to prompts. However, the Apple study shows how changing even a few unimportant words can alter that pattern recognition.

One example of this variance involved a problem about collecting kiwis over several days. Apple researchers first ran a control version of the problem, then added some inconsequential information about kiwi size.
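The setup can be sketched in a few lines of Python. Everything here is an assumption for illustration: the wording, the name, and the numbers are invented rather than taken from the paper, and `naive_solver` is a deliberately crude stand-in for surface pattern matching, not a description of how any real LLM works.

```python
import re


def build_problem(day1, day2, distractor=None):
    """Return (question text, correct answer) for a hypothetical kiwi problem."""
    text = f"Sam picks {day1} kiwis on Monday and {day2} kiwis on Tuesday."
    if distractor is not None:
        # Irrelevant detail: kiwi size does not change how many were picked.
        text += f" {distractor} of the kiwis were smaller than average."
    text += " How many kiwis does Sam have?"
    return text, day1 + day2


def naive_solver(text):
    """Toy pattern matcher: adds up every number that appears in the text."""
    return sum(int(n) for n in re.findall(r"\d+", text))


control_q, control_ans = build_problem(30, 12)
variant_q, variant_ans = build_problem(30, 12, distractor=5)

print(naive_solver(control_q) == control_ans)  # True: 30 + 12 = 42
print(naive_solver(variant_q) == variant_ans)  # False: the solver also adds the 5
```

The correct answer is the same in both versions, yet a solver that reacts to surface patterns rather than to the logic of the question is thrown off by the extra clause.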

Both Meta and OpenAI Models Showed Issues

Meta’s Llama and OpenAI’s o1 then changed their answers from the control version, even though kiwi size has no bearing on the problem’s outcome. OpenAI’s GPT-4o also performed worse when tiny variations were introduced into the data given to the LLM.

Since LLMs are becoming more prominent in our culture, this news raises serious concerns about whether we can trust AI to provide accurate answers to our inquiries, especially on matters like financial advice. It also reinforces the need to verify the information you receive when using large language models.

That means you'll want to do some critical thinking and due diligence instead of blindly relying on AI. Then again, if you're someone who uses AI regularly, you probably already knew that.

? Remove AdsThe above is the detailed content of A New Apple Study Shows AI Reasoning Has Critical Flaws. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

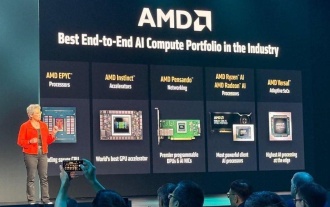

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

By mid-2025, the AI “arms race” is heating up, and xAI and Anthropic have both released their flagship models, Grok 4 and Claude 4. These two models are at opposite ends of the design philosophy and deployment platform, yet they

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

For example, if you ask a model a question like: “what does (X) person do at (X) company?” you may see a reasoning chain that looks something like this, assuming the system knows how to retrieve the necessary information:Locating details about the co