Summary

- GPT-4, GPT-4o, and GPT-4o mini differ in cost, speed, and features, so each suits different tasks.

- GPT-4o is the most powerful and accurate, but it is limited for free users; consider upgrading to ChatGPT Plus for more features.

- Choose the model based on task complexity and accuracy needs.

With the May 2024 release of GPT-4o to accompany GPT-4, you're probably wondering what the difference between these AI models is—and which ChatGPT model you should actually use.

Although OpenAI's GPT-4 models start from the same foundation, they have some considerable differences that mean they're better suited to some tasks than others, not to mention the cost associated with accessing them.

So, what's the difference between OpenAI's GPT-4 models?

GPT-4 vs. GPT-4o vs. GPT-4o mini

OpenAI's GPT-4 models include several variants, each designed to meet different needs. Here's an overview of the differences between GPT-4, GPT-4o (Omni), and GPT-4o mini.

GPT-4

GPT-4 is the foundational model. It understands and generates complex sentences and is useful for a broad range of applications, such as creative writing, data analysis, language translation, and code generation. With GPT-4's context window of roughly 23,000-25,000 words, you can also attach multiple long documents and have it answer questions about your uploaded files. Since this is the base model for the series, you'll also be able to access all of GPT-4's useful features on both GPT-4 Turbo and GPT-4o.
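As a rough check on that word count, the sketch below converts a token budget into an approximate number of English words. The 0.75 words-per-token ratio is an assumed rule of thumb rather than an exact property of any tokenizer, and the helper name `approx_words` is hypothetical.

```python
# Rough conversion between tokens and English words.
# ASSUMPTION: about 0.75 words per token is a rule of thumb for English text,
# not an exact property of any tokenizer.
WORDS_PER_TOKEN = 0.75


def approx_words(tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a given token budget."""
    return round(tokens * words_per_token)


# The "up to 32k tokens" window this article lists for the ChatGPT models.
context_tokens = 32_000
print(approx_words(context_tokens))  # 24000
```

Under that assumption, a 32k-token window lands in the middle of the 23,000-25,000 word range quoted above. Real documents will vary with language, formatting, and vocabulary.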

GPT-4o mini

GPT-4o mini is a small language model (SLM) that competes well with many large language models (LLMs). Even though it's trained on smaller and more specific datasets, GPT-4o mini outperforms GPT-4 in some key areas, such as response speed and free user access.

However, while GPT-4o mini is designed to be a multimodal model, its current ChatGPT version only supports text, without vision or audio. Additionally, unlike with GPT-4 and GPT-4o, ChatGPT does not let you attach files when using GPT-4o mini. It is still unclear whether ChatGPT will enable multimodal capabilities for GPT-4o mini in the future.

GPT-4o

GPT-4o ("o" for "omni") is the latest addition to the GPT-4 series of models and is the default model selected for both ChatGPT Free and Plus users. This model is smarter and four times faster than GPT-4, making it ideal for real-time applications. GPT-4o was the first multimodal model in the series: it can analyze many kinds of input, such as text, audio, images, and video, and can generate text and images, all within ChatGPT.

Additionally, OpenAI has allowed free tier users limited GPT-4o access, at 16 messages every 3 hours. After that, ChatGPT will revert to using GPT-3.5.

Here's a breakdown of each GPT-4 model:

| Feature | GPT-4 | GPT-4o | GPT-4o mini |
|---|---|---|---|
| Cost (ChatGPT) | $20/month | Free (16 messages every 3 hours), $20/month (80 messages every 3 hours) | Free (16 messages every 3 hours), $20/month (80 messages every 3 hours) |
| Response Speed | Standard | 4X faster response than GPT-4 | 2X faster response than GPT-4o |
| Context Window | Up to 32k tokens | Up to 32k tokens | Up to 32k tokens |
| Multimodal Input/Output | No | Yes | Yes |
| MMLU | 86.3 | 88.7 | 82.0 |
| GPQA | 48.0 | 53.6 | 40.2 |
| MATH | 42.5 | 76.6 | 70.2 |
| HumanEval | 67.0 | 90.2 | 87.2 |

In addition to costs, response times, and context window, I've also added accuracy benchmarks for each model to help compare performance on various tasks. The benchmark tests include MMLU for testing academic knowledge, GPQA for assessing graduate-level science questions, HumanEval for assessing the models' ability to code, and MATH for solving math problems. In each, a higher score is better.
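To make the benchmark comparison easier to read, here is a minimal Python sketch that loads the scores from the table and reports the top model per benchmark, plus the benchmarks on which one model beats another. The scores are copied from this article; the helper names `best_model` and `wins` are hypothetical.

```python
# Benchmark scores as listed in this article's comparison table (higher is better).
scores = {
    "MMLU": {"GPT-4": 86.3, "GPT-4o": 88.7, "GPT-4o mini": 82.0},
    "GPQA": {"GPT-4": 48.0, "GPT-4o": 53.6, "GPT-4o mini": 40.2},
    "MATH": {"GPT-4": 42.5, "GPT-4o": 76.6, "GPT-4o mini": 70.2},
    "HumanEval": {"GPT-4": 67.0, "GPT-4o": 90.2, "GPT-4o mini": 87.2},
}


def best_model(benchmark: str) -> str:
    """Return the model with the highest score on one benchmark."""
    row = scores[benchmark]
    return max(row, key=row.get)


def wins(model_a: str, model_b: str) -> list[str]:
    """List the benchmarks on which model_a scores higher than model_b."""
    return [name for name, row in scores.items() if row[model_a] > row[model_b]]


for name in scores:
    print(f"{name}: {best_model(name)}")

print(wins("GPT-4o mini", "GPT-4"))  # ['MATH', 'HumanEval']
```

On these numbers, GPT-4o leads every benchmark, while GPT-4o mini beats GPT-4 on MATH and HumanEval but not on MMLU or GPQA.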

Which GPT-4 Model Should You Use?

Choosing the right model depends on your specific needs and the nature of the tasks you intend to perform.

GPT-4o is the most powerful model in the lineup. It has the highest accuracy scores in all benchmarked tests and will likely perform best in any interaction. However, the number of messages you can send GPT-4o is limited, especially for free-tier users. This limitation is a key reason why you should still upgrade to ChatGPT Plus.

Still, it is best to reserve GPT-4o for interactions that require multimodal inputs and outputs or when the utmost accuracy is needed. GPT-4o mini scores higher than GPT-4 on the mathematics (MATH) and coding (HumanEval) benchmarks, although it trails GPT-4 on MMLU and GPQA, so it is a good fit for fast, text-based queries, particularly math and coding questions. Use the GPT-4 model when you need to attach files such as documents, PDFs, and audio.
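The recommendations above can be written as a small decision rule. This is only an illustrative sketch of the article's advice, not an official selection method; the function name `recommend_model` and its parameters are hypothetical.

```python
def recommend_model(
    needs_multimodal: bool = False,
    needs_top_accuracy: bool = False,
    needs_file_attachments: bool = False,
) -> str:
    """Map task requirements to a ChatGPT model, following this article's advice."""
    # Multimodal input/output or maximum accuracy: spend the limited GPT-4o messages.
    if needs_multimodal or needs_top_accuracy:
        return "GPT-4o"
    # File attachments without the needs above: the article suggests GPT-4.
    if needs_file_attachments:
        return "GPT-4"
    # Everything else is treated as a text-only query.
    return "GPT-4o mini"


print(recommend_model(needs_multimodal=True))        # GPT-4o
print(recommend_model(needs_file_attachments=True))  # GPT-4
print(recommend_model())                             # GPT-4o mini
```

The order of the checks is a design choice: it gives GPT-4o priority whenever accuracy or multimodality matters, which matches the article's view that it is the strongest model, and falls back to the cheaper options otherwise.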


Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1794

1794

16

16

1739

1739

56

56

1590

1590

29

29

1468

1468

72

72

267

267

587

587

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

By mid-2025, the AI “arms race” is heating up, and xAI and Anthropic have both released their flagship models, Grok 4 and Claude 4. These two models are at opposite ends of the design philosophy and deployment platform, yet they

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

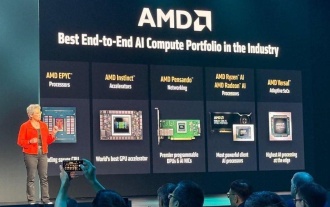

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

For example, if you ask a model a question like: “what does (X) person do at (X) company?” you may see a reasoning chain that looks something like this, assuming the system knows how to retrieve the necessary information:Locating details about the co

Batch Processing vs Mini-Batch Training in Deep Learning

Jun 30, 2025 am 09:46 AM

Batch Processing vs Mini-Batch Training in Deep Learning

Jun 30, 2025 am 09:46 AM

Deep learning has revolutionised the AI field by allowing machines to grasp more in-depth information within our data. Deep learning has been able to do this by replicating how our brain functions through the logic of neuron syna