Understanding Prompt Tuning: Enhance Your Language Models with Precision

Mar 06, 2025, 12:21 PM

Prompt Tuning: A Parameter-Efficient Approach to Enhancing Large Language Models

In the rapidly advancing field of large language models (LLMs), prompt tuning offers a way to adapt pre-trained models to new tasks without the substantial computational overhead of traditional training. This article explores prompt tuning's fundamentals, compares it to fine-tuning and prompt engineering, and outlines a practical example using Hugging Face and the bloomz-560m model.

What is Prompt Tuning?

Prompt tuning improves a pre-trained LLM's performance on a task without altering its architecture or its weights. Instead, it learns "soft prompts": a small set of trainable embedding vectors that are prepended to the input and steer the model's responses.

The illustration contrasts traditional model tuning with prompt tuning. Traditional methods require a separate model for each task, while prompt tuning uses a single foundational model across multiple tasks, adjusting task-specific prompts.

How Prompt Tuning Works:

- Soft Prompt Initialization: Trainable virtual tokens are added to the start of the input sequence. Their embeddings can be initialized randomly or using heuristics, for example from the embeddings of real vocabulary tokens.

- Forward Pass and Loss Evaluation: The model processes the combined input (soft prompt + actual input), and the output is compared to the expected outcome using a loss function.

- Backpropagation: Errors are backpropagated, but only the soft prompt parameters are adjusted, not the model's weights.

- Iteration: This cycle of forward pass, loss evaluation, and backpropagation repeats across multiple epochs, refining the soft prompts to minimize the loss.
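The four steps above can be sketched with a deliberately tiny stand-in for an LLM. This is a toy under stated assumptions, not a real language model: the "model" (`FROZEN_W`, `predict`) is a hypothetical mean-pooling classifier with fixed weights, the data is made up, and the gradient is written out by hand instead of using an autograd library. What it does share with real prompt tuning is the structure: trainable vectors are prepended to the input, and they are the only values the training loop updates.

```python
import math
import random

FROZEN_W = (1.0, -1.0)  # toy "pre-trained" weights (hypothetical); a tuple, so never updated


def predict(soft_prompt, input_embeds):
    """Frozen toy model: mean-pool [soft prompt + input], fixed linear layer, sigmoid."""
    seq = soft_prompt + input_embeds  # the soft prompt is prepended to the input
    pooled = [sum(tok[d] for tok in seq) / len(seq) for d in range(len(FROZEN_W))]
    logit = sum(w * p for w, p in zip(FROZEN_W, pooled))
    return 1.0 / (1.0 + math.exp(-logit))


def mean_loss(soft_prompt, data):
    """Binary cross-entropy averaged over the dataset."""
    total = 0.0
    for input_embeds, y in data:
        p = predict(soft_prompt, input_embeds)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def init_soft_prompt(num_virtual_tokens, seed=0):
    """Step 1: random initialization of the virtual-token embeddings."""
    rng = random.Random(seed)
    return [[rng.uniform(-0.1, 0.1) for _ in FROZEN_W] for _ in range(num_virtual_tokens)]


def tune(soft_prompt, data, epochs=200, lr=0.5):
    """Steps 2-4: forward pass, loss gradient, update of the soft prompt only."""
    for _ in range(epochs):
        for input_embeds, y in data:
            p = predict(soft_prompt, input_embeds)  # forward pass
            seq_len = len(soft_prompt) + len(input_embeds)
            for tok in soft_prompt:  # backpropagation, derived by hand
                for d, w in enumerate(FROZEN_W):
                    # dLoss/dlogit = p - y and dlogit/dtok[d] = w / seq_len
                    tok[d] -= lr * (p - y) * w / seq_len
    return soft_prompt


# Made-up data: each example is (list of input token embeddings, binary label).
data = [([[1.0, 4.0]], 1), ([[1.0, 10.0]], 0)]
prompt = init_soft_prompt(num_virtual_tokens=2)
loss_before = mean_loss(prompt, data)
tune(prompt, data)
loss_after = mean_loss(prompt, data)
print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
```

In this toy the soft prompt can only shift the pooled representation, so it behaves like a learned offset. In a transformer the virtual tokens are attended to by every later position, which gives them far more influence, but the training loop has the same shape.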

Prompt Tuning vs. Fine-Tuning vs. Prompt Engineering

Prompt tuning, fine-tuning, and prompt engineering are distinct approaches to improving LLM performance:

- Fine-tuning: Resource-intensive, because it updates the model's own weights by further training on a task-specific dataset. This adapts the model closely to the nuances of the data but demands significant computational resources and risks overfitting.

- Prompt tuning: Trains "soft prompts" that are added to the input, changing how the model responds without altering its weights. It offers a balance between performance improvement and resource efficiency.

- Prompt engineering: No training is involved; it relies solely on crafting effective prompts that draw on the model's existing knowledge. It requires a good understanding of how the model responds to prompts, but no training compute.

| Method | Resource Intensity | Training Required | Best For |
|---|---|---|---|
| Fine-Tuning | High | Yes | Deep model customization |
| Prompt Tuning | Low | Yes | Maintaining model integrity across multiple tasks |
| Prompt Engineering | None | No | Quick adaptations without computational cost |
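To put a rough number on the "Low" versus "High" resource intensity in the table, the arithmetic below compares how many parameters each method trains. The figures are assumptions for illustration: a hidden size of 1024 for bloomz-560m, a total of roughly 560 million parameters (taken from the model name), and 8 virtual tokens as an arbitrary choice.

```python
def soft_prompt_params(num_virtual_tokens, hidden_size):
    """Prompt tuning trains one embedding vector per virtual token."""
    return num_virtual_tokens * hidden_size


HIDDEN_SIZE = 1024          # assumed embedding width of bloomz-560m
TOTAL_PARAMS = 560_000_000  # approximate, inferred from the model name
NUM_VIRTUAL_TOKENS = 8      # illustrative choice, not from the article

trainable = soft_prompt_params(NUM_VIRTUAL_TOKENS, HIDDEN_SIZE)
fraction = trainable / TOTAL_PARAMS
print(f"prompt tuning trains {trainable} parameters")
print(f"that is {fraction:.6%} of the parameters full fine-tuning would update")
```

Under these assumptions the soft prompt is a few thousand numbers, while full fine-tuning would update hundreds of millions. The forward and backward passes still run through the whole model, so the savings show up mainly in gradients, optimizer state, and the size of what is stored per task.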

Benefits of Prompt Tuning

Prompt tuning offers several advantages:

- Resource Efficiency: Only the soft prompt parameters are trained while the model's weights stay frozen, so training needs far fewer resources than full fine-tuning.

- Rapid Deployment: Adapting to a new task is faster because the adjustments are limited to the soft prompts.

- Model Integrity: The pre-trained model's capabilities and knowledge are preserved.

- Task Flexibility: A single foundational model can handle multiple tasks by switching soft prompts.

- Reduced Human Involvement: Soft prompts are optimized automatically, which reduces the manual trial and error of hand-written prompts.

- Comparable Performance: Research shows prompt tuning can achieve performance similar to fine-tuning, especially with large models.
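The task flexibility point can be illustrated with a small sketch: one frozen model shared by every task, plus a lookup table of soft prompts. Everything here is hypothetical (the task names, the vectors, and the toy scoring function `frozen_model`); in practice each soft prompt would be the result of a separate training run.

```python
FROZEN_W = (1.0, -1.0)  # shared toy "model" weights (hypothetical), never updated


def frozen_model(seq):
    """Toy frozen model: sum the token embeddings, then apply a fixed linear score."""
    return sum(FROZEN_W[d] * tok[d] for tok in seq for d in range(len(FROZEN_W)))


# Hypothetical learned soft prompts: one small set of vectors per task.
SOFT_PROMPTS = {
    "sentiment": [(0.5, 0.0), (0.5, 0.0)],
    "toxicity": [(0.0, 1.0)],
}


def run_task(task, input_embeds):
    """Prepend the task's soft prompt; the model is identical for every task."""
    return frozen_model(list(SOFT_PROMPTS[task]) + list(input_embeds))


x = [(1.0, 1.0)]  # the same input embeddings, sent to two different tasks
print(run_task("sentiment", x))
print(run_task("toxicity", x))
```

Adding a task means adding one more entry to the table; the model weights are loaded once and never copied.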

A Step-by-Step Approach to Prompt Tuning (using Hugging Face and bloomz-560m)

This section provides a simplified overview of the process, focusing on key steps and concepts.

- Loading Model and Tokenizer: Load the bloomz-560m model and tokenizer from Hugging Face. (Code omitted for brevity; refer to the original for details.)

- Initial Inference: Run inference with the untuned model to establish a baseline. (Code omitted.)

- Dataset Preparation: Use a suitable dataset (e.g., awesome-chatgpt-prompts) and tokenize it. (Code omitted.)

- Tuning Configuration and Training: Configure prompt tuning using `PromptTuningConfig` from the PEFT library and `TrainingArguments` from Transformers. Train the model using a `Trainer` object. (Code omitted.)

- Inference with Tuned Model: Run inference with the tuned model and compare the results to the baseline. (Code omitted.)
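Since the code for these steps is omitted, here is one way they might fit together. This is a sketch under assumptions, not the original article's code: the Hub IDs `bigscience/bloomz-560m` and `fka/awesome-chatgpt-prompts`, the `prompt` column name, the 50-example slice, the sample prompt, and every hyperparameter in `SETTINGS` are guesses chosen for illustration. Imports are deferred into `run()` so that the settings can be inspected without installing the libraries or downloading the model.

```python
MODEL_NAME = "bigscience/bloomz-560m"         # assumed Hub id for the model in the article
DATASET_NAME = "fka/awesome-chatgpt-prompts"  # assumed Hub id for the dataset

# Illustrative hyperparameters, not values taken from the article.
SETTINGS = {
    "num_virtual_tokens": 4,
    "num_train_epochs": 6,
    "learning_rate": 3e-2,
    "batch_size": 4,
}


def generate(model, tokenizer, text, max_new_tokens=50):
    """Greedy generation helper used for both the baseline and the tuned model."""
    inputs = tokenizer(text, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


def run(prompt="I want you to act as a motivational coach. "):
    from datasets import load_dataset
    from peft import PromptTuningConfig, PromptTuningInit, TaskType, get_peft_model
    from transformers import (AutoModelForCausalLM, AutoTokenizer,
                              DataCollatorForLanguageModeling, Trainer,
                              TrainingArguments)

    # Steps 1-2: load the model and tokenizer, then record a baseline answer.
    tokenizer = AutoTokenizer.from_pretrained(MODEL_NAME)
    model = AutoModelForCausalLM.from_pretrained(MODEL_NAME)
    baseline = generate(model, tokenizer, prompt)

    # Step 3: tokenize a small slice of the dataset (assumes a "prompt" text column).
    data = load_dataset(DATASET_NAME, split="train[:50]")
    data = data.map(lambda batch: tokenizer(batch["prompt"]),
                    batched=True, remove_columns=data.column_names)

    # Step 4: wrap the frozen model with trainable virtual tokens and train them.
    config = PromptTuningConfig(
        task_type=TaskType.CAUSAL_LM,
        prompt_tuning_init=PromptTuningInit.RANDOM,
        num_virtual_tokens=SETTINGS["num_virtual_tokens"],
    )
    peft_model = get_peft_model(model, config)
    peft_model.print_trainable_parameters()

    args = TrainingArguments(
        output_dir="prompt_tuning_out",
        num_train_epochs=SETTINGS["num_train_epochs"],
        learning_rate=SETTINGS["learning_rate"],
        per_device_train_batch_size=SETTINGS["batch_size"],
        report_to="none",
    )
    trainer = Trainer(
        model=peft_model,
        args=args,
        train_dataset=data,
        data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
    )
    trainer.train()

    # Step 5: answer the same prompt with the tuned model for comparison.
    tuned = generate(peft_model, tokenizer, prompt)
    return baseline, tuned
```

Calling `baseline, tuned = run()` downloads the model and dataset and trains on whatever device is available. With so few examples and epochs the tuned output may differ only modestly from the baseline, so treat the result as a smoke test of the pipeline and not as a benchmark.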

Conclusion

Prompt tuning is a valuable technique for efficiently enhancing LLMs. Its resource efficiency, rapid deployment, and preservation of model integrity make it a powerful tool for various applications. Further exploration of resources on fine-tuning, prompt engineering, and advanced LLM techniques is encouraged.

The above is the detailed content of Understanding Prompt Tuning: Enhance Your Language Models with Precision. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1794

1794

16

16

1739

1739

56

56

1590

1590

29

29

1468

1468

72

72

267

267

587

587

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

By mid-2025, the AI “arms race” is heating up, and xAI and Anthropic have both released their flagship models, Grok 4 and Claude 4. These two models are at opposite ends of the design philosophy and deployment platform, yet they

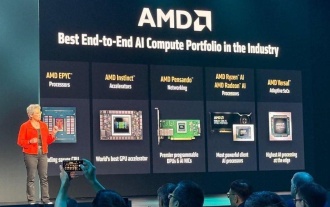

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

For example, if you ask a model a question like: “what does (X) person do at (X) company?” you may see a reasoning chain that looks something like this, assuming the system knows how to retrieve the necessary information:Locating details about the co

AI Will Blackmail, Snitch, Even Kill For Its Hidden Agendas

Jun 26, 2025 am 10:36 AM

AI Will Blackmail, Snitch, Even Kill For Its Hidden Agendas

Jun 26, 2025 am 10:36 AM

Threats associated with AI use are rising in both volume and severity, as this new-age technology touches more and more aspects of human lives. A new report now warns of another impending danger associated with the wide-scale use