Build a Multimodal Agent for Product Ingredient Analysis

Mar 09, 2025, 11:28 AM

Unlock the Secrets of Product Ingredients with a Multimodal AI Agent! Tired of deciphering complex ingredient lists? This article shows you how to build a powerful Product Ingredient Analyzer using Gemini 2.0, Phidata, and Tavily Web Search. Say goodbye to time-consuming individual ingredient searches and hello to instant, actionable insights!

Key Learning Outcomes

This tutorial will guide you through:

- Designing a multimodal AI agent architecture leveraging Phidata and Gemini 2.0 for vision-language tasks.

- Integrating Tavily Web Search for enhanced context and information retrieval within your agent workflow.

- Building a Product Ingredient Analyzer Agent that expertly combines image processing and web search for detailed product analysis.

- Mastering the art of crafting effective system prompts and instructions to optimize agent performance in multimodal scenarios.

- Developing a user-friendly Streamlit UI for real-time image analysis, nutritional information, and personalized health recommendations.

This article is part of the Data Science Blogathon.

Table of Contents

- Understanding Multimodal Systems

- Real-World Multimodal Applications

- The Power of Multimodal Agents

- Constructing Your Product Ingredient Analyzer Agent

- Essential Links

- Conclusion

- Frequently Asked Questions

Understanding Multimodal Systems

Multimodal systems process and interpret several data types at once, including text, images, audio, and video. Vision-language models such as Gemini 2.0 Flash, GPT-4o, Claude 3.5 Sonnet, and Pixtral-12B relate these modalities to one another and extract information from complex inputs. This article focuses on vision-language models that analyze images and generate textual explanations, combining computer vision and natural language processing to interpret visual information based on user prompts.

Real-World Multimodal Applications

Multimodal systems are revolutionizing various industries:

- Finance: Instantly understand complex financial terms by simply taking a screenshot.

- E-commerce: Obtain detailed ingredient analysis and health insights by photographing product labels.

- Education: Gain simplified explanations of complex diagrams and concepts from textbooks.

- Healthcare: Receive clear explanations of medical reports and prescription labels.

The Power of Multimodal Agents

The shift towards multimodal agents represents a significant advancement in AI interaction. Here's why they're so effective:

- Simultaneous processing of visual and textual data leads to more precise and context-rich responses.

- Complex information is simplified, making it easily accessible to a wider audience.

- Users upload a single image for comprehensive analysis, eliminating the need for manual ingredient searches.

- Combining web search and image analysis delivers more complete and reliable insights.

Constructing Your Product Ingredient Analyzer Agent

Let's build the Product Ingredient Analysis Agent step-by-step:

Step 1: Setting Up Dependencies

We'll need:

- Gemini 2.0 Flash: For powerful multimodal processing.

- Tavily Search: For seamless web search integration.

- Phidata: To orchestrate the agent system and manage workflows.

- Streamlit: To create a user-friendly web application.

!pip install phidata google-generativeai tavily-python streamlit pillow

Step 2: API Setup and Configuration

Obtain API keys from:

- Gemini API key: http://m.miracleart.cn/link/feac4a1c91eb74bfce13cb7c052c233b

- Tavily API key: http://m.miracleart.cn/link/c73ff6dceadedf3652d678cd790ff167

from phi.agent import Agent
from phi.model.google import Gemini  # needs an API key
from phi.tools.tavily import TavilyTools  # also needs an API key
import os

TAVILY_API_KEY = "<replace-your-api-key>"
GOOGLE_API_KEY = "<replace-your-api-key>"
os.environ['TAVILY_API_KEY'] = TAVILY_API_KEY
os.environ['GOOGLE_API_KEY'] = GOOGLE_API_KEY
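Pasting keys directly into source code makes them easy to leak when the file is shared or committed. As a minimal sketch of an alternative, the helper below reads a key that was exported in the shell beforehand and fails early with a readable message if it is missing or still set to the placeholder. The function name require_env is hypothetical and not part of Phidata or the original article; only the two variable names come from the snippet above.

```python
import os


def require_env(name: str) -> str:
    """Return the value of an environment variable, or fail with a clear message."""
    value = os.environ.get(name, "").strip()
    # Treat the tutorial's "<replace-your-api-key>" placeholder as "not set".
    if not value or value.startswith("<replace"):
        raise RuntimeError(f"Set the {name} environment variable before starting the agent.")
    return value


if __name__ == "__main__":
    # Both names match the variables the tutorial sets via os.environ.
    for key_name in ("TAVILY_API_KEY", "GOOGLE_API_KEY"):
        try:
            require_env(key_name)
            print(f"{key_name}: found")
        except RuntimeError as err:
            print(err)
```

Calling require_env for both keys at startup surfaces a missing key before the first model or search request is made.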

Step 3: System Prompt and Instructions

Clear instructions are crucial for optimal LLM performance. We'll define the agent's role and responsibilities:

SYSTEM_PROMPT = """
You are an expert Food Product Analyst specialized in ingredient analysis and nutrition science.
Your role is to analyze product ingredients, provide health insights, and identify potential concerns by combining ingredient analysis with scientific research.
You utilize your nutritional knowledge and research works to provide evidence-based insights, making complex ingredient information accessible and actionable for users.
Return your response in Markdown format.
"""

INSTRUCTIONS = """
* Read ingredient list from product image
* Remember the user may not be educated about the product, break it down in simple words like explaining to 10 year kid
* Identify artificial additives and preservatives
* Check against major dietary restrictions (vegan, halal, kosher). Include this in response.
* Rate nutritional value on scale of 1-5
* Highlight key health implications or concerns
* Suggest healthier alternatives if needed
* Provide brief evidence-based recommendations
* Use Search tool for getting context
"""

Step 4: Defining the Agent Object

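The Phidata Agent is configured to return Markdown-formatted output and to follow the system prompt and instructions defined above. Gemini 2.0 Flash serves as the reasoning model, and Tavily Search is attached as a tool for web search. Note that Gemini() called without arguments relies on Phidata's default model id, which can vary with the installed version; pass an explicit id if you need to pin a specific Gemini 2.0 Flash variant.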

agent = Agent(
    model=Gemini(),
    tools=[TavilyTools()],
    markdown=True,
    system_prompt=SYSTEM_PROMPT,
    instructions=INSTRUCTIONS,
)

Step 5: Multimodal Image Processing

Provide the image path or URL, along with a prompt, to initiate analysis. Examples using both approaches are provided in the original article.
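The call itself can be sketched as follows. This is an illustration under stated assumptions, not the original article's code: it assumes the Phidata agent exposes a run method that accepts an images list of local paths or URLs and returns an object with a content attribute, which you should verify against your installed Phidata version. The helper names is_url and analyze_product and the default prompt text are hypothetical. The agent is passed in as a parameter, so the snippet runs without network access or API keys.

```python
from pathlib import Path
from urllib.parse import urlparse


def is_url(source: str) -> bool:
    """True when the string looks like an http(s) URL rather than a local path."""
    parsed = urlparse(source)
    return parsed.scheme in ("http", "https") and bool(parsed.netloc)


def analyze_product(agent, image_source: str, prompt: str = "Analyze the product image") -> str:
    """Send one image (local path or URL) plus a prompt to the agent and return its text."""
    # Catch a mistyped local path before spending a model call on it.
    if not is_url(image_source) and not Path(image_source).is_file():
        raise FileNotFoundError(f"No image found at {image_source}")
    # Assumption: agent.run(message, images=[...]) returns a response with a .content attribute.
    response = agent.run(prompt, images=[image_source])
    return response.content
```

With the agent from Step 4, usage would look like analyze_product(agent, "images/label.jpg"), where the path is a placeholder for your own image.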

Step 6 & 7: Streamlit Web App Development (Detailed code in original article)

A Streamlit application is created to provide a user-friendly interface for image upload, analysis, and result display. The app includes tabs for example products, image uploads, and live photo capture. Image resizing and caching are implemented for optimal performance.
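The app code is not reproduced here, so the following is only a rough sketch of the two performance ideas mentioned above, written with the standard library alone. fit_within computes a target size that keeps the aspect ratio, and AnalysisCache skips repeated analysis of identical image bytes. Both names, the 1024-pixel default, and the in-memory dictionary are assumptions for illustration; the original app may rely on Pillow for the actual resize and on Streamlit's own caching decorators instead.

```python
import hashlib
from typing import Callable, Dict, Tuple


def fit_within(width: int, height: int, max_side: int = 1024) -> Tuple[int, int]:
    """Scale (width, height) down so the longer side is at most max_side, keeping aspect ratio."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    longest = max(width, height)
    if longest <= max_side:
        return width, height  # never upscale small images
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


class AnalysisCache:
    """In-memory cache keyed by the SHA-256 digest of the image bytes."""

    def __init__(self) -> None:
        self._results: Dict[str, str] = {}

    def get_or_compute(self, image_bytes: bytes, analyze: Callable[[bytes], str]) -> str:
        key = hashlib.sha256(image_bytes).hexdigest()
        if key not in self._results:
            self._results[key] = analyze(image_bytes)  # only runs on a cache miss
        return self._results[key]
```

Keying the cache on a content hash rather than a filename means re-uploading the same photo under a different name still reuses the earlier result.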

Essential Links

- Full code: [Insert GitHub link here]

- Deployed App: [Insert deployed app link here]

Conclusion

Multimodal AI agents are transforming how we interact with and understand complex information. The Product Ingredient Analyzer demonstrates the power of combining vision, language, and web search to provide accessible, actionable insights.

Frequently Asked Questions

- Q1. Which open-source multimodal vision-language models are available?: Examples include LLaVA, Pixtral-12B, Multimodal-GPT, NVILA, and Qwen.

- Q2. Is Llama 3 Multimodal?: The original Llama 3 models are text-only; the Llama 3.2 Vision models accept image input and are multimodal.

- Q3. Multimodal LLM vs. Multimodal Agent: An LLM processes multimodal data; an agent uses LLMs and other tools to perform tasks and make decisions based on multimodal inputs.

Remember to replace the placeholders with your actual API keys.

The above is the detailed content of Build a Multimodal Agent for Product Ingredient Analysis. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1794

1794

16

16

1739

1739

56

56

1590

1590

29

29

1467

1467

72

72

267

267

587

587

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

By mid-2025, the AI “arms race” is heating up, and xAI and Anthropic have both released their flagship models, Grok 4 and Claude 4. These two models are at opposite ends of the design philosophy and deployment platform, yet they

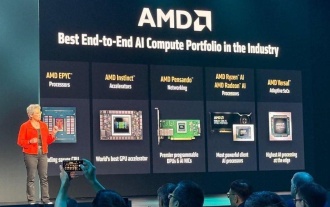

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

For example, if you ask a model a question like: “what does (X) person do at (X) company?” you may see a reasoning chain that looks something like this, assuming the system knows how to retrieve the necessary information:Locating details about the co

AI Will Blackmail, Snitch, Even Kill For Its Hidden Agendas

Jun 26, 2025 am 10:36 AM

AI Will Blackmail, Snitch, Even Kill For Its Hidden Agendas

Jun 26, 2025 am 10:36 AM

Threats associated with AI use are rising in both volume and severity, as this new-age technology touches more and more aspects of human lives. A new report now warns of another impending danger associated with the wide-scale use