Unlocking AI Efficiency: A Deep Dive into Mixture of Experts (MoE) Models and OLMoE

Training large language models (LLMs) demands significant computational resources, which is a challenge for organizations seeking cost-effective AI solutions. The Mixture of Experts (MoE) technique offers an efficient alternative. By dividing a large model into smaller, specialized sub-models ("experts") and running only a few of them for each input, MoE makes better use of compute and makes advanced AI more accessible.

This article explores MoE models, focusing on the open-source OLMoE, its architecture, training, performance, and practical application using Ollama on Google Colab.

Key Learning Objectives:

- Grasp the concept and importance of MoE models in optimizing AI computational costs.

- Understand the architecture of MoE models, including experts and router networks.

- Learn about OLMoE's unique features, training methods, and performance benchmarks.

- Gain practical experience running OLMoE on Google Colab with Ollama.

- Explore the efficiency of sparse model architectures like OLMoE in various AI applications.

The Need for Mixture of Experts Models:

Traditional deep learning models, including standard transformers, use the entire network for every input. This "dense" approach is computationally expensive. MoE models instead use a sparse architecture that activates only the most relevant experts for each input, which cuts the computation needed per input. All experts must still be stored in memory, so the savings are in compute rather than in model size.

How Mixture of Experts Models Function:

MoE models work much like a team tackling a complex project, where each member specializes in a particular sub-task. A "router" or "gating network" decides which experts each input is sent to, so only the relevant specialists do the work for that input.
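To make the router's role concrete, here is a minimal pure-Python sketch of one MoE layer processing a single token, assuming top-k gating: the router scores every expert, the k highest-scoring experts run, and their outputs are mixed using the router's softmax weights. The expert design (a random linear map plus ReLU), the sizes, and the choice to renormalize the gate weights over the selected experts are illustrative assumptions, not OLMoE's actual implementation.

```python
import math
import random

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def matvec(matrix, vec):
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def make_expert(dim, rng):
    """Toy expert: one random linear map followed by ReLU (stand-in for an FFN)."""
    weights = [[rng.gauss(0, 0.5) for _ in range(dim)] for _ in range(dim)]
    return lambda x: [max(0.0, h) for h in matvec(weights, x)]

def moe_forward(x, router_weights, experts, k):
    """Route one token vector x to its top-k experts and mix their outputs."""
    logits = matvec(router_weights, x)            # one score per expert
    probs = softmax(logits)
    top = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)             # renormalize over chosen experts
    out = [0.0] * len(x)
    for i in top:                                 # only k experts do any work
        y = experts[i](x)
        gate = probs[i] / norm
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, top

rng = random.Random(0)
dim, num_experts, k = 4, 8, 2
experts = [make_expert(dim, rng) for _ in range(num_experts)]
router_weights = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_experts)]
token = [rng.gauss(0, 1) for _ in range(dim)]
output, chosen = moe_forward(token, router_weights, experts, k)
print("experts used:", chosen)
```

Only k of the expert functions are called for the token, which is where the compute savings come from. A production implementation would process a batch of tokens at once and run each expert only on the tokens routed to it.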

Core Components of MoE:

- Experts: Smaller neural networks (in MoE language models, usually the feed-forward blocks inside each transformer layer) that tend to specialize in particular kinds of input during training. Only a subset of experts is activated for any given input.

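- Router/Gate Network: This component acts as a task manager: it scores every expert for the current input and selects which ones to use. Common routing strategies include top-k routing, where each token picks its k highest-scoring experts, and expert choice routing, where each expert picks the tokens it will process.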
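Expert choice routing reverses the selection: instead of each token picking its top experts, each expert picks the tokens it scores highest, up to a fixed capacity. The sketch below assumes a common formulation in which router scores are normalized per token with a softmax over experts; the toy logits and capacity values are made up for illustration.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def expert_choice(token_logits, capacity):
    """Expert choice routing.

    token_logits[t][e] is the router score of token t for expert e.
    Returns one sorted list of token indices per expert.
    """
    scores = [softmax(row) for row in token_logits]  # normalize over experts, per token
    num_tokens, num_experts = len(scores), len(scores[0])
    assignments = []
    for e in range(num_experts):
        # Each expert ranks all tokens by affinity and keeps its top `capacity`.
        ranked = sorted(range(num_tokens), key=lambda t: scores[t][e], reverse=True)
        assignments.append(sorted(ranked[:capacity]))
    return assignments

logits = [
    [2.0, 0.0],  # token 0 leans toward expert 0
    [1.0, 1.0],  # token 1 is indifferent
    [0.0, 3.0],  # token 2 leans toward expert 1
    [0.5, 0.0],  # token 3 mildly prefers expert 0
]
print(expert_choice(logits, capacity=2))  # [[0, 3], [1, 2]]
print(expert_choice(logits, capacity=1))  # [[0], [2]]
```

With this scheme every expert processes exactly `capacity` tokens, so load is balanced by construction. The trade-off is that a token may be picked by several experts or by none: with `capacity=1`, tokens 1 and 3 are not processed by any expert.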

Delving into the OLMoE Model:

OLMoE, a fully open-source MoE language model, stands out for its efficiency. It features a sparse architecture, activating only a small fraction of its total parameters for each input. OLMoE comes in two versions:

- OLMoE-1B-7B: 7 billion parameters total, with 1 billion activated per token.

- OLMoE-1B-7B-INSTRUCT: An instruction-tuned version of the base model, fine-tuned to follow instructions and respond in a chat format.

Each MoE layer in OLMoE contains 64 experts, of which only eight are activated for each token. This is what keeps the per-token cost low.
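A quick back-of-the-envelope calculation shows how sparse this is. It uses the rounded figures from the model name (about 1B active and 7B total parameters) and assumes the common rule of thumb that a forward pass costs roughly 2 FLOPs per active parameter per token, an approximation that ignores attention over the context.

```python
# Rounded figures from the text: "1B-7B" means ~1B active and ~7B total parameters.
TOTAL_PARAMS = 7e9
ACTIVE_PARAMS = 1e9
NUM_EXPERTS = 64     # experts per MoE layer
ACTIVE_EXPERTS = 8   # experts run per token

expert_fraction = ACTIVE_EXPERTS / NUM_EXPERTS   # share of experts used in each MoE layer
param_fraction = ACTIVE_PARAMS / TOTAL_PARAMS    # share of all weights used per token

# Rule of thumb (an approximation): ~2 FLOPs per active parameter per token.
dense_flops_per_token = 2 * TOTAL_PARAMS         # if all ~7B weights ran for every token
moe_flops_per_token = 2 * ACTIVE_PARAMS
speedup = dense_flops_per_token / moe_flops_per_token

print(f"experts active per layer:    {expert_fraction:.1%}")   # 12.5%
print(f"parameters active per token: {param_fraction:.1%}")    # 14.3%
print(f"approx. compute saving vs. dense: {speedup:.0f}x")     # 7x
```

The parameter fraction comes out slightly higher than the expert fraction because, in a typical MoE transformer, components such as the attention layers and embeddings are shared and run for every token; only the expert feed-forward blocks are sparse.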

OLMoE Training Methodology:

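OLMoE was pretrained on a dataset of about 5 trillion tokens. To keep training stable and the experts evenly used, it adds auxiliary losses to the language-modeling objective: a load-balancing loss that discourages the router from sending most tokens to a few experts, and a router z-loss that penalizes large router logits and further refines expert selection.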
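The two auxiliary losses can be sketched as follows. This assumes the widely used formulations from earlier MoE work (a Switch-Transformer-style load-balancing loss and an ST-MoE-style router z-loss); OLMoE's exact normalization and loss coefficients are not reproduced here. In training, both terms would be added to the language-modeling loss with small weights.

```python
import math

def load_balancing_loss(router_probs, chosen_experts, num_experts):
    """Auxiliary load-balancing loss: num_experts * sum_i f_i * P_i.

    router_probs[t]: softmax router probabilities for token t.
    chosen_experts[t]: the expert indices token t was dispatched to (its top-k).
    f_i is the fraction of dispatches sent to expert i; P_i is its mean router probability.
    """
    num_tokens = len(router_probs)
    total_assignments = sum(len(c) for c in chosen_experts)
    f = [0.0] * num_experts
    for choices in chosen_experts:
        for e in choices:
            f[e] += 1.0 / total_assignments
    p = [sum(row[e] for row in router_probs) / num_tokens for e in range(num_experts)]
    return num_experts * sum(fi * pi for fi, pi in zip(f, p))

def logsumexp(xs):
    """Numerically stable log(sum(exp(x)))."""
    m = max(xs)
    return m + math.log(sum(math.exp(x - m) for x in xs))

def router_z_loss(router_logits):
    """Router z-loss: mean squared log-partition of each token's router logits."""
    return sum(logsumexp(row) ** 2 for row in router_logits) / len(router_logits)

# Balanced: 4 tokens, 4 experts, uniform router, each token sent to a different expert.
uniform = [[0.25] * 4 for _ in range(4)]
balanced = load_balancing_loss(uniform, [[0], [1], [2], [3]], num_experts=4)
# Collapsed: the router sends every token to expert 0 with full confidence.
peaked = [[1.0, 0.0, 0.0, 0.0] for _ in range(4)]
collapsed = load_balancing_loss(peaked, [[0]] * 4, num_experts=4)
print(balanced, collapsed)  # 1.0 4.0

# Shifting all logits by +10 leaves the softmax unchanged but raises the z-loss.
print(router_z_loss([[0.0] * 4]), router_z_loss([[10.0] * 4]))
```

The load-balancing loss reaches its minimum of 1.0 when tokens are spread evenly and grows as routing collapses onto a few experts. The z-loss illustrates a different concern: it penalizes the magnitude of the logits themselves, which helps avoid numerical instability even when the resulting routing decisions would be identical.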

Performance of OLMoE-1B-7B:

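According to benchmarks reported by the OLMoE authors, the model outperforms models with a similar number of active parameters and compares favorably with larger models such as Llama2-13B and DeepSeekMoE-16B on NLP benchmarks including MMLU, GSM8k, and HumanEval, while using far less compute per token.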

Running OLMoE on Google Colab with Ollama:

Ollama simplifies the deployment and execution of LLMs. The following steps outline how to run OLMoE on Google Colab using Ollama:

- Install the necessary packages. In a Colab cell, each shell command needs its own ! prefix on its own line:

  !sudo apt update
  !sudo apt install -y pciutils
  !pip install langchain-ollama
  !curl -fsSL https://ollama.com/install.sh | sh

- Run the Ollama server: (Code provided in original article)

- Pull the OLMoE model:

  !ollama pull sam860/olmoe-1b-7b-0924

- Prompt and interact with the model: (Code provided in original article, demonstrating summarization, logical reasoning, and coding tasks.)
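The server step is not reproduced in this article. One possible way to start the server from a Colab cell is sketched below, assuming the Ollama binary was installed by the install script and that the server listens on Ollama's default local port, 11434. The helper names server_is_up and start_ollama_server are hypothetical, not part of Ollama or LangChain.

```python
import subprocess
import time
import urllib.error
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # Ollama's default local address (assumed setup)

def server_is_up(url=OLLAMA_URL, timeout=1.0):
    """Return True if something answers HTTP at url, False otherwise."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except (urllib.error.URLError, OSError):
        return False

def start_ollama_server(wait_seconds=30.0):
    """Launch `ollama serve` in the background and wait until it responds."""
    proc = subprocess.Popen(
        ["ollama", "serve"],
        stdout=subprocess.DEVNULL,  # discard logs so an unread pipe cannot block the server
        stderr=subprocess.DEVNULL,
    )
    deadline = time.time() + wait_seconds
    while time.time() < deadline:
        if server_is_up():
            return proc
        time.sleep(0.5)
    proc.terminate()
    raise RuntimeError("Ollama server did not start in time")
```

In a Colab cell, run `server = start_ollama_server()` before pulling the model, since `ollama pull` talks to the running server. Call `server.terminate()` when you are finished.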

Examples of OLMoE's performance on various question types are included in the original article with screenshots.
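The prompting code is likewise not reproduced here. A minimal sketch using the langchain-ollama package installed earlier might look like the following; it assumes the Ollama server is running and the sam860/olmoe-1b-7b-0924 model has been pulled. The prompt templates and the helpers build_messages and ask_olmoe are illustrative assumptions, not the original article's code.

```python
# Hypothetical prompt templates for the three task types mentioned above.
TASK_PROMPTS = {
    "summarization": "Summarize the following text in two sentences:\n\n{text}",
    "reasoning": "Answer step by step: {text}",
    "coding": "Write a Python function for this task and explain it briefly: {text}",
}

def build_messages(task, text):
    """Return LangChain-style (role, content) message tuples for one task."""
    if task not in TASK_PROMPTS:
        raise ValueError(f"unknown task: {task!r}")
    return [
        ("system", "You are a helpful assistant."),
        ("human", TASK_PROMPTS[task].format(text=text)),
    ]

def ask_olmoe(task, text, model="sam860/olmoe-1b-7b-0924"):
    """Send one prompt to the locally served model via langchain-ollama."""
    # Imported lazily so the helpers above work even without the package installed.
    from langchain_ollama import ChatOllama
    llm = ChatOllama(model=model, temperature=0)
    return llm.invoke(build_messages(task, text)).content
```

For example, `print(ask_olmoe("reasoning", "If all bloops are razzies and all razzies are lazzies, are all bloops lazzies?"))`. Setting `temperature=0` makes answers more repeatable, which helps when comparing the model's output across task types.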

Conclusion:

MoE models offer a significant advancement in AI efficiency. OLMoE, with its open-source nature and sparse architecture, exemplifies the potential of this approach. By carefully selecting and activating only the necessary experts, OLMoE achieves high performance while minimizing computational overhead, making advanced AI more accessible and cost-effective.


(Note: Image URLs remain unchanged from the original input.)

The above is the detailed content of OLMoE: Open Mixture-of-Experts Language Models. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

From Adoption To Advantage: 10 Trends Shaping Enterprise LLMs In 2025

Jun 20, 2025 am 11:13 AM

From Adoption To Advantage: 10 Trends Shaping Enterprise LLMs In 2025

Jun 20, 2025 am 11:13 AM

Here are ten compelling trends reshaping the enterprise AI landscape.Rising Financial Commitment to LLMsOrganizations are significantly increasing their investments in LLMs, with 72% expecting their spending to rise this year. Currently, nearly 40% a

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

Disclosure: My company, Tirias Research, has consulted for IBM, Nvidia, and other companies mentioned in this article.Growth driversThe surge in generative AI adoption was more dramatic than even the most optimistic projections could predict. Then, a

These Startups Are Helping Businesses Show Up In AI Search Summaries

Jun 20, 2025 am 11:16 AM

These Startups Are Helping Businesses Show Up In AI Search Summaries

Jun 20, 2025 am 11:16 AM

Those days are numbered, thanks to AI. Search traffic for businesses like travel site Kayak and edtech company Chegg is declining, partly because 60% of searches on sites like Google aren’t resulting in users clicking any links, according to one stud

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

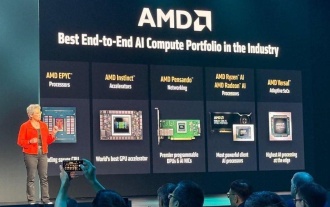

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h