OpenAI Shifts Focus With GPT-4.1, Prioritizes Coding And Cost Efficiency

Apr 16, 2025 11:37 AM

The release includes three distinct models: GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano. It signals a move toward task-specific optimization within the large language model landscape. These models are not immediately replacing user-facing interfaces like ChatGPT; they are positioned as tools for developers building applications and services.

For technology leaders and business decision makers, this release warrants attention. It indicates a strategic direction toward more specialized and potentially more cost-effective large language models optimized for enterprise functions, particularly software development, complex data analysis, and the creation of autonomous AI agents. The availability of tiered models and improved performance metrics could influence decisions around AI integration, build-versus-buy strategies, and resource allocation for internal development tools, potentially altering established development cycles.

Technically, the GPT-4.1 series represents an incremental but focused upgrade over its predecessor, GPT-4o. A significant enhancement is the expansion of the context window to support up to 1 million tokens. This is a substantial increase from the 128,000-token capacity of GPT-4o, allowing the models to process and maintain coherence across much larger volumes of information, equivalent to roughly 750,000 words. This capability directly addresses use cases involving the analysis of extensive codebases, the summarization of lengthy documents, or maintaining context in the prolonged, complex interactions necessary for sophisticated AI agents. The models also have a refreshed knowledge cutoff, incorporating information up to June 2024.
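As a rough illustration of the arithmetic above, the following sketch converts word counts into token estimates using the ratio implied by the article (1 million tokens for roughly 750,000 words, or about 0.75 words per token). The helper names and the optional output reserve are assumptions made for illustration; real token counts depend on the tokenizer and on the text itself.

```python
# Rough context-window arithmetic based on the figures quoted in the article.
# The words-per-token ratio is an approximation, not a tokenizer measurement.

GPT_4_1_CONTEXT_TOKENS = 1_000_000  # context window cited for GPT-4.1
GPT_4O_CONTEXT_TOKENS = 128_000     # context window cited for GPT-4o
WORDS_PER_TOKEN = 0.75              # 750,000 words / 1,000,000 tokens


def estimate_tokens(word_count: int) -> int:
    """Estimate token usage from a word count (hypothetical helper)."""
    return round(word_count / WORDS_PER_TOKEN)


def fits_in_context(word_count: int, context_tokens: int,
                    reserved_for_output: int = 0) -> bool:
    """Return True if the estimated prompt plus the output reserve fits."""
    return estimate_tokens(word_count) + reserved_for_output <= context_tokens


if __name__ == "__main__":
    words = 300_000  # e.g. a large document set
    print("estimated tokens:", estimate_tokens(words))
    print("fits 128K window:", fits_in_context(words, GPT_4O_CONTEXT_TOKENS))
    print("fits 1M window:", fits_in_context(words, GPT_4_1_CONTEXT_TOKENS))
```

An estimate like this is only a first filter; measuring the actual token count with the provider's tokenizer is the safer check before sending a very large prompt.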

OpenAI reports improvements in core competencies relevant to developers. Internal benchmarks suggest GPT-4.1 shows a measurable improvement in coding tasks compared to both GPT-4o and the earlier GPT-4.5 preview model. On SWE-bench, a benchmark that measures the ability to resolve real-world software engineering issues, GPT-4.1 achieved a success rate of about 55%, according to OpenAI. The models are also trained to follow instructions more literally, which requires careful and specific prompting but allows for greater control over the output. The tiered structure offers flexibility: the standard GPT-4.1 provides the highest capability, while the mini and nano versions trade some capability for speed and reduced operational cost, with nano positioned as the fastest and lowest-cost option, suitable for tasks like classification or autocompletion.
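One way a developer might act on the tiered structure is to route each request to a tier by task type. The sketch below is a minimal example under stated assumptions: the task categories, the fallback to the mini tier, and the model identifier strings are illustrative choices and do not come from the article.

```python
# Hypothetical task-to-tier routing. The task categories and the model
# identifier strings below are assumptions made for illustration.

MODEL_BY_TIER = {
    "standard": "gpt-4.1",
    "mini": "gpt-4.1-mini",
    "nano": "gpt-4.1-nano",
}

# Lightweight tasks the article names as a fit for the nano tier.
LIGHTWEIGHT_TASKS = {"classification", "autocompletion"}

# Examples of heavier work that may justify the standard tier (assumed list).
COMPLEX_TASKS = {"code_generation", "agent_workflow", "long_document_analysis"}


def choose_model(task: str) -> str:
    """Pick a model tier for a task; unknown tasks fall back to the mini tier."""
    if task in LIGHTWEIGHT_TASKS:
        return MODEL_BY_TIER["nano"]
    if task in COMPLEX_TASKS:
        return MODEL_BY_TIER["standard"]
    return MODEL_BY_TIER["mini"]


if __name__ == "__main__":
    for task in ("classification", "code_generation", "summarization"):
        print(task, "->", choose_model(task))
```

Defaulting unknown tasks to the middle tier is a design choice, not a recommendation; a team could just as reasonably default to the cheapest tier and escalate only when quality checks fail.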

In the broader market context, the GPT-4.1 release intensifies competition among leading AI labs. Providers like Google with its Gemini series and Anthropic with its Claude models have also introduced models boasting million-token context windows and strong coding capabilities.

This reflects an industry trend moving beyond general-purpose models toward variants optimized for specific high-value tasks, often driven by enterprise demand. OpenAI’s partnership with Microsoft is evident, with GPT-4.1 models being made available through Microsoft Azure OpenAI Service and integrated into developer tools like GitHub Copilot and GitHub Models. Concurrently, OpenAI announced plans to retire API access to its GPT-4.5 preview model by mid-July 2025, positioning the new 4.1 series as offering comparable or better performance at a lower cost.

OpenAI’s GPT-4.1 series introduces a significant reduction in API pricing compared to its predecessor, GPT-4o, making advanced AI capabilities more accessible to developers and enterprises.

This pricing strategy positions GPT-4.1 as a more cost-effective solution, offering up to 80% savings per query compared to GPT-4o while also delivering enhanced performance and faster response times. The tiered approach lets developers select the appropriate balance between performance and cost, with GPT-4.1 nano suited to tasks like classification or autocompletion and the standard GPT-4.1 model suited to more complex applications.
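The per-query savings claim can be checked for any workload with simple arithmetic. The sketch below assumes token-based pricing quoted per million tokens; the function names are hypothetical, and the prices in the usage example are placeholders chosen only to make the arithmetic visible, not OpenAI's actual rates.

```python
# Per-query cost and savings arithmetic under token-based pricing.
# All prices used in the example are placeholders, NOT published rates.


def query_cost(input_tokens: int, output_tokens: int,
               input_price_per_million: float,
               output_price_per_million: float) -> float:
    """Cost of one query, given prices per one million tokens."""
    total = (input_tokens * input_price_per_million
             + output_tokens * output_price_per_million)
    return total / 1_000_000


def savings_percent(old_cost: float, new_cost: float) -> float:
    """Percentage saved when moving from old_cost to new_cost."""
    if old_cost <= 0:
        raise ValueError("old_cost must be positive")
    return (old_cost - new_cost) / old_cost * 100


if __name__ == "__main__":
    # Placeholder prices: the "new" model is priced at one fifth of the "old".
    old = query_cost(10_000, 1_000, input_price_per_million=10.0,
                     output_price_per_million=30.0)
    new = query_cost(10_000, 1_000, input_price_per_million=2.0,
                     output_price_per_million=6.0)
    print(f"old: ${old:.4f}  new: ${new:.4f}  "
          f"savings: {savings_percent(old, new):.1f}%")
```

Because input and output tokens are usually priced differently, the realized savings depend on the input-to-output ratio of the workload, which is why an "up to" figure may not match a given application.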

From a strategic perspective, the GPT-4.1 family presents several implications for businesses. The improved coding and long-context capabilities could accelerate software development cycles, enabling developers to tackle more complex problems, analyze legacy code more effectively, or generate code documentation and tests more efficiently. They also increase the potential for building more sophisticated internal AI agents capable of handling multi-step tasks with access to large internal knowledge bases. Cost efficiency is another factor: OpenAI claims the 4.1 series operates at a lower cost than GPT-4.5 and has increased prompt caching discounts for users processing repetitive context. Furthermore, the upcoming availability of fine-tuning for the 4.1 and 4.1-mini models on platforms like Azure will allow organizations to customize these models using their own data for specific domain terminology, workflows, or brand voice, potentially offering a competitive advantage.

However, potential adopters should consider certain factors. The enhanced literalness in instruction-following makes prompt engineering even more critical, requiring clarity and precision to achieve desired outcomes. While the million-token context window is impressive, OpenAI’s data suggests that model accuracy can decrease when processing information at the extreme end of that scale, indicating a need to test and validate specific long-context use cases. Integrating and managing these API-based models effectively within existing enterprise architectures and security frameworks also requires careful planning and technical expertise.
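One common way to validate long-context behavior is a "needle in a haystack" test: plant a known fact at different depths in prompts of increasing size and check whether the model retrieves it. The sketch below is a minimal harness under stated assumptions. The filler text, the planted fact, and `stub_model` are all hypothetical, and the stub simulates degradation by reading only the first 5,000 characters; in practice `ask_model` would wrap a real API call.

```python
from typing import Callable, Dict, List

# All text constants are made up for the test; none come from the article.
FILLER = "The quarterly report was filed without incident."
NEEDLE = "The access code for the archive is 7421."
QUESTION = "What is the access code for the archive?"
EXPECTED = "7421"


def build_prompt(filler_sentences: int, needle_position: float) -> str:
    """Build a prompt with the needle at a relative depth from 0.0 to 1.0."""
    sentences = [FILLER] * filler_sentences
    sentences.insert(int(needle_position * filler_sentences), NEEDLE)
    return " ".join(sentences) + "\n\nQuestion: " + QUESTION


def run_sweep(ask_model: Callable[[str], str], sizes: List[int],
              positions: List[float]) -> Dict[int, float]:
    """Return retrieval accuracy for each context size."""
    results: Dict[int, float] = {}
    for size in sizes:
        hits = sum(EXPECTED in ask_model(build_prompt(size, pos))
                   for pos in positions)
        results[size] = hits / len(positions)
    return results


def stub_model(prompt: str) -> str:
    """Stand-in for a real model: only 'sees' the first 5,000 characters."""
    return EXPECTED if NEEDLE in prompt[:5000] else "unknown"


if __name__ == "__main__":
    accuracy = run_sweep(stub_model, sizes=[10, 1000],
                         positions=[0.0, 0.5, 1.0])
    for size, score in accuracy.items():
        print(f"{size} filler sentences: accuracy {score:.2f}")
```

A real evaluation would use documents and questions from the actual use case, since retrieval of a single planted fact is a much easier task than reasoning across a large codebase or document set.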

This release from OpenAI underscores the rapid iteration cycles in the AI space, demanding continuous evaluation of model capabilities, cost structures and alignment with business objectives.

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Kimi K2: The Most Powerful Open-Source Agentic Model

Jul 12, 2025 am 09:16 AM

Remember the flood of open-source Chinese models that disrupted the GenAI industry earlier this year? While DeepSeek took most of the headlines, Kimi K1.5 was one of the prominent names in the list. And the model was quite cool.

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

Grok 4 vs Claude 4: Which is Better?

Jul 12, 2025 am 09:37 AM

By mid-2025, the AI “arms race” is heating up, and xAI and Anthropic have both released their flagship models, Grok 4 and Claude 4. These two models are at opposite ends of the design philosophy and deployment platform, yet they

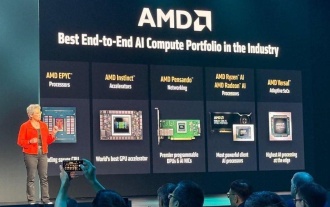

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

Chain Of Thought For Reasoning Models Might Not Work Out Long-Term

Jul 02, 2025 am 11:18 AM

For example, if you ask a model a question like: “what does (X) person do at (X) company?” you may see a reasoning chain that looks something like this, assuming the system knows how to retrieve the necessary information:Locating details about the co

Batch Processing vs Mini-Batch Training in Deep Learning

Jun 30, 2025 am 09:46 AM

Batch Processing vs Mini-Batch Training in Deep Learning

Jun 30, 2025 am 09:46 AM

Deep learning has revolutionised the AI field by allowing machines to grasp more in-depth information within our data. Deep learning has been able to do this by replicating how our brain functions through the logic of neuron syna