Harness the Power of Fine-tuned LLMs with MonsterAPI: A Comprehensive Guide

Imagine a virtual assistant perfectly understanding and anticipating your needs. This is becoming reality thanks to advancements in Large Language Models (LLMs). However, achieving this level of personalization requires fine-tuning – the process of refining a general-purpose model for specific tasks. MonsterAPI simplifies this, making fine-tuning and evaluation efficient and accessible. This guide demonstrates how MonsterAPI helps refine and assess LLMs, transforming them into powerful tools tailored to your unique needs.

Key Learning Objectives:

- Master the complete fine-tuning and evaluation workflow using the MonsterAPI platform.

- Understand the critical role of evaluation in ensuring accuracy and coherence in LLM outputs.

- Gain practical experience with MonsterAPI's developer-friendly fine-tuning and evaluation APIs.

Table of Contents:

- The Evolution of Large Language Models

- Understanding LLM Fine-tuning

- The Importance of LLM Evaluation

- A Step-by-Step Guide to Fine-tuning and Evaluating LLMs with MonsterAPI

- Frequently Asked Questions

The Evolution of Large Language Models:

Recent years have witnessed remarkable progress in LLMs within the field of natural language processing. Numerous open-source and closed-source models are now available, empowering researchers and developers to push the boundaries of AI. While these models excel at general tasks, achieving peak accuracy and personalization for specific applications demands fine-tuning.

Fine-tuning adapts pre-trained models to domain-specific tasks using custom datasets. The workflow involves preparing a dedicated dataset, training the model, and ultimately deploying it. Thorough evaluation is also necessary to gauge the model's effectiveness across the relevant tasks. MonsterAPI's fine-tuning service and its integrated LLM evaluation engine simplify both steps for developers and businesses. The benefits include:

- Automated GPU environment configuration.

- Optimized memory usage to support an appropriate batch size.

- Customizable model configurations for specific business needs.

- Model experiment tracking integration with Weights & Biases (WandB).

- An integrated evaluation engine for benchmarking model performance.

Understanding LLM Fine-tuning:

Fine-tuning tailors a pre-trained LLM to a specific task by training it on a custom dataset. This process leverages the pre-trained model's general knowledge while adapting it to the nuances of the new data. The process involves:

- Pre-trained Model Selection: Choose a suitable pre-trained, open-weight language model (e.g., Llama, Mistral, Falcon, Gemma) based on your needs.

- Dataset Preparation: Gather, preprocess, and structure your custom dataset in an input-output format suitable for training.

- Model Training: Train the pre-trained model on your dataset, adjusting its parameters to learn patterns from the new data. MonsterAPI utilizes cost-effective and highly optimized GPUs to accelerate this process.

- Hyperparameter Tuning: Optimize hyperparameters (batch size, learning rate, epochs, etc.) for optimal performance.

- Evaluation: Assess the fine-tuned model's performance on benchmarks such as MMLU, GSM8K, and TruthfulQA to ensure it meets your requirements. MonsterAPI's integrated evaluation API simplifies this step.
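The dataset preparation step above can be sketched as follows. This is a minimal illustration, assuming records with instruction, input, and output fields (the layout used by Alpaca-style datasets). The function name format_example and the prompt template are hypothetical and are not part of MonsterAPI.

```python
def format_example(record: dict) -> dict:
    """Turn one raw record into a prompt/completion pair for training."""
    instruction = record["instruction"].strip()
    context = record.get("input", "").strip()
    if context:
        # Include the optional context block only when the record has one.
        prompt = (
            f"### Instruction:\n{instruction}\n\n"
            f"### Input:\n{context}\n\n### Response:\n"
        )
    else:
        prompt = f"### Instruction:\n{instruction}\n\n### Response:\n"
    return {"prompt": prompt, "completion": record["output"].strip()}


# Two hypothetical raw records, one with context and one without.
raw_records = [
    {"instruction": "Translate to French.", "input": "Good morning", "output": "Bonjour"},
    {"instruction": "Name a primary color.", "input": "", "output": "Red"},
]
training_pairs = [format_example(r) for r in raw_records]
```

Keeping the template in one function makes it easy to apply exactly the same formatting at inference time, which generally matters for fine-tuned models.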

The Importance of LLM Evaluation:

LLM evaluation assesses how well a fine-tuned model performs on its target task. It checks that the model reaches the desired accuracy, coherence, and consistency on a validation dataset. Benchmarks such as MMLU and GSM8K provide standardized scores and highlight areas for improvement. MonsterAPI's evaluation engine provides comprehensive reports to guide this process.
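To make the idea of a benchmark score concrete, here is a small sketch of exact-match accuracy, the kind of score reported for multiple-choice benchmarks. It is an illustration only, not MonsterAPI's evaluation code; the answer lists are invented for the example.

```python
from typing import Sequence


def accuracy(predictions: Sequence[str], references: Sequence[str]) -> float:
    """Fraction of predictions that match the reference answer exactly."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        raise ValueError("cannot score an empty evaluation set")
    # Compare case-insensitively after trimming whitespace.
    correct = sum(
        p.strip().upper() == r.strip().upper()
        for p, r in zip(predictions, references)
    )
    return correct / len(references)


# Hypothetical multiple-choice answers: three of four match.
model_answers = ["A", "c", "B", "D"]
gold_answers = ["A", "C", "D", "D"]
score = accuracy(model_answers, gold_answers)
print(f"accuracy = {score:.2f}")  # → accuracy = 0.75
```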

A Step-by-Step Guide to Fine-tuning and Evaluating LLMs with MonsterAPI:

MonsterAPI positions its LLM fine-tuner as faster and more cost-effective than many alternatives; the table below lists reported fine-tuning times and costs for 7B-parameter models on several platforms. The service supports various model types, including text generation, code generation, and image generation. This guide focuses on text generation. MonsterAPI runs jobs on a network of NVIDIA A100 GPUs with varying memory capacities to accommodate different model sizes and hyperparameters.

| Platform/Service Provider | Model Name | Time Taken | Cost of Fine-tuning (USD) |
|---|---|---|---|
| MonsterAPI | Falcon-7B | 27 min 26 s | $5-6 |
| MonsterAPI | Llama-7B | 115 min | $6 |
| MosaicML | MPT-7B-Instruct | 2.3 hours | $37 |
| Valohai | Mistral-7B | 3 hours | $1.5 |
| Mistral | Mistral-7B | 2-3 hours | $4 |

Step 1: Setup and Installation:

Install the MonsterAPI client library and obtain your MonsterAPI key. The leading "!" runs pip from a notebook cell; omit it in a regular shell.

!pip install monsterapi==1.0.8

import os

from monsterapi import client as mclient

# ... (rest of the import statements)

os.environ['MONSTER_API_KEY'] = 'YOUR_MONSTER_API_KEY' # Replace with your key

client = mclient(api_key=os.environ.get("MONSTER_API_KEY"))
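Hard-coding a key in a notebook is easy to leak. As a hedged alternative, the sketch below reads the key from the environment and fails early if it is missing or still the placeholder. The helper name load_api_key is hypothetical; only the MONSTER_API_KEY variable name comes from the code above.

```python
import os


def load_api_key(var_name: str = "MONSTER_API_KEY") -> str:
    """Return the API key from the environment, or raise a clear error."""
    key = os.environ.get(var_name, "").strip()
    # Reject both a missing value and the unedited placeholder string.
    if not key or key == "YOUR_MONSTER_API_KEY":
        raise RuntimeError(
            f"Set the {var_name} environment variable to a real API key"
        )
    return key
```

With this helper, the client could be created as mclient(api_key=load_api_key()), so a misconfigured environment is reported before any job is launched.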

Step 2: Prepare and Launch the Fine-tuning Job:

Create a launch payload specifying the base model, LoRA parameters, dataset, and training settings.

launch_payload = {

"pretrainedmodel_config": {

"model_path": "huggyllama/llama-7b",

# ... (rest of the configuration)

},

"data_config": {

"data_path": "tatsu-lab/alpaca",

# ... (rest of the configuration)

},

"training_config": {

# ... (training parameters)

},

"logging_config": { "use_wandb": False }

}

ret = client.finetune(service="llm", params=launch_payload)

deployment_id = ret.get("deployment_id")

print(ret)
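Before submitting a job, it can help to check that the payload contains the sections shown above. The sketch below is an assumption-laden convenience check, not MonsterAPI validation logic: the list of required sections simply mirrors the four top-level keys in this guide's payload, and the service itself may treat some of them as optional or accept others. The function name missing_sections is hypothetical.

```python
# Top-level sections used in this guide's launch payload (assumed required).
REQUIRED_SECTIONS = (
    "pretrainedmodel_config",
    "data_config",
    "training_config",
    "logging_config",
)


def missing_sections(payload: dict) -> list:
    """Return the names of expected sections that are absent or not dicts."""
    return [
        name
        for name in REQUIRED_SECTIONS
        if not isinstance(payload.get(name), dict)
    ]


# A deliberately incomplete payload to show the check in action.
example_payload = {
    "pretrainedmodel_config": {"model_path": "huggyllama/llama-7b"},
    "data_config": {"data_path": "tatsu-lab/alpaca"},
    "logging_config": {"use_wandb": False},
}
problems = missing_sections(example_payload)
print(problems)  # → ['training_config']
```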

Step 3: Monitor Job Status and Logs:

status_ret = client.get_deployment_status(deployment_id)

print(status_ret)

logs_ret = client.get_deployment_logs(deployment_id)

print(logs_ret)
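Fine-tuning jobs take a while, so the status call is usually repeated until the job finishes. The following is a generic polling sketch under stated assumptions: get_status is a caller-supplied function (for example, one that calls client.get_deployment_status(deployment_id) and extracts a status string), and the status names used here are hypothetical, since the structure of the status response is not shown in this guide.

```python
import time
from typing import Callable, Iterable


def wait_for_status(
    get_status: Callable[[], str],
    terminal: Iterable[str],
    interval_s: float = 30.0,
    timeout_s: float = 3 * 60 * 60,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll get_status until it returns a terminal state or the timeout expires."""
    terminal_set = {s.lower() for s in terminal}
    deadline = clock() + timeout_s
    while True:
        status = get_status()
        if status.lower() in terminal_set:
            return status
        if clock() >= deadline:
            raise TimeoutError(
                f"job still in state {status!r} after {timeout_s} seconds"
            )
        sleep(interval_s)


# Demonstration with a fake status source instead of a real API call.
fake_states = iter(["pending", "training", "completed"])
final = wait_for_status(
    lambda: next(fake_states),
    terminal={"completed", "failed"},  # hypothetical status names
    interval_s=0.0,
)
print(final)  # → completed
```

Passing sleep and clock as parameters keeps the loop testable without real waiting.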

Step 4: Evaluate the Fine-tuned Model:

Use the LLM evaluation API to assess performance.

url = "https://api.monsterapi.ai/v1/evaluation/llm"

payload = {

"eval_engine": "lm_eval",

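"basemodel_path": launch_payload["pretrainedmodel_config"]["model_path"], # Same base model that was fine-tuned

"loramodel_path": lora_model_path, # Location of the fine-tuned LoRA weights; take it from status_ret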

"task": "mmlu"

}

# ... (rest of the evaluation code)
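The original evaluation code is elided above, so the following is only a sketch of how such a request might be assembled with Python's standard library. The URL and payload keys come from this guide; the bearer-token Authorization header, the helper name build_eval_request, and the example LoRA path are assumptions. The request is constructed but not sent.

```python
import json
import urllib.request

EVAL_URL = "https://api.monsterapi.ai/v1/evaluation/llm"


def build_eval_request(
    api_key: str, base_model: str, lora_model_path: str, task: str = "mmlu"
) -> urllib.request.Request:
    """Build (but do not send) a POST request for an evaluation job."""
    payload = {
        "eval_engine": "lm_eval",
        "basemodel_path": base_model,
        "loramodel_path": lora_model_path,
        "task": task,
    }
    headers = {
        "Content-Type": "application/json",
        "Accept": "application/json",
        # Assumed authentication scheme; check the API documentation.
        "Authorization": f"Bearer {api_key}",
    }
    return urllib.request.Request(
        EVAL_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


# Placeholder values; the LoRA path here is purely illustrative.
request = build_eval_request("dummy-key", "huggyllama/llama-7b", "path/to/lora-adapter")
print(request.get_method(), request.full_url)
```

Sending it would be a matter of passing the request to urllib.request.urlopen and decoding the JSON response, whose structure is not covered here.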

Conclusion:

Fine-tuning and evaluating LLMs are crucial for creating high-performing, task-specific models. MonsterAPI provides a streamlined and efficient platform for this process, offering comprehensive performance metrics and insights. By leveraging MonsterAPI, developers can confidently build and deploy custom LLMs tailored to their unique applications.

Frequently Asked Questions:

Q1: What are fine-tuning and evaluation of LLMs?

A1: Fine-tuning adapts a pre-trained LLM to a specific task using a custom dataset. Evaluation assesses the model's performance against benchmarks to ensure quality.

Q2: How does MonsterAPI aid in LLM fine-tuning?

A2: MonsterAPI provides hosted APIs for efficient and cost-effective LLM fine-tuning and evaluation, utilizing optimized computing resources.

Q3: What dataset types are supported?

A3: MonsterAPI supports various dataset types, including text, code, images, and videos, depending on the chosen base model.

The above is the detailed content of How to Fine-Tune Large Language Models with MonsterAPI. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

From Adoption To Advantage: 10 Trends Shaping Enterprise LLMs In 2025

Jun 20, 2025 am 11:13 AM

From Adoption To Advantage: 10 Trends Shaping Enterprise LLMs In 2025

Jun 20, 2025 am 11:13 AM

Here are ten compelling trends reshaping the enterprise AI landscape.Rising Financial Commitment to LLMsOrganizations are significantly increasing their investments in LLMs, with 72% expecting their spending to rise this year. Currently, nearly 40% a

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

AI Investor Stuck At A Standstill? 3 Strategic Paths To Buy, Build, Or Partner With AI Vendors

Jul 02, 2025 am 11:13 AM

Investing is booming, but capital alone isn’t enough. With valuations rising and distinctiveness fading, investors in AI-focused venture funds must make a key decision: Buy, build, or partner to gain an edge? Here’s how to evaluate each option—and pr

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

The Unstoppable Growth Of Generative AI (AI Outlook Part 1)

Jun 21, 2025 am 11:11 AM

Disclosure: My company, Tirias Research, has consulted for IBM, Nvidia, and other companies mentioned in this article.Growth driversThe surge in generative AI adoption was more dramatic than even the most optimistic projections could predict. Then, a

These Startups Are Helping Businesses Show Up In AI Search Summaries

Jun 20, 2025 am 11:16 AM

These Startups Are Helping Businesses Show Up In AI Search Summaries

Jun 20, 2025 am 11:16 AM

Those days are numbered, thanks to AI. Search traffic for businesses like travel site Kayak and edtech company Chegg is declining, partly because 60% of searches on sites like Google aren’t resulting in users clicking any links, according to one stud

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

AGI And AI Superintelligence Are Going To Sharply Hit The Human Ceiling Assumption Barrier

Jul 04, 2025 am 11:10 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). Heading Toward AGI And

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Build Your First LLM Application: A Beginner's Tutorial

Jun 24, 2025 am 10:13 AM

Have you ever tried to build your own Large Language Model (LLM) application? Ever wondered how people are making their own LLM application to increase their productivity? LLM applications have proven to be useful in every aspect

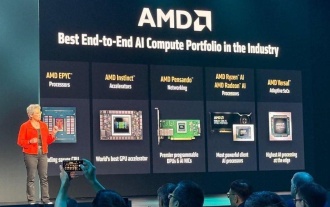

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

AMD Keeps Building Momentum In AI, With Plenty Of Work Still To Do

Jun 28, 2025 am 11:15 AM

Overall, I think the event was important for showing how AMD is moving the ball down the field for customers and developers. Under Su, AMD’s M.O. is to have clear, ambitious plans and execute against them. Her “say/do” ratio is high. The company does

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Future Forecasting A Massive Intelligence Explosion On The Path From AI To AGI

Jul 02, 2025 am 11:19 AM

Let’s talk about it. This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here). For those readers who h