Introduction

In the era of digital transformation, system uptime and continuous availability are crucial to enterprises in every industry. High availability (HA) clustering has become a key strategy for keeping services accessible and uninterrupted, even in the face of hardware or software failures. Thanks to its robustness and flexibility, Linux has become an ideal platform for deploying HA solutions. This article explores the concept of Linux high availability clusters in depth, covering their mechanisms, the technologies behind them, and their key role in building resilient, fault-tolerant systems.

Cluster Concept

Fundamentally, a cluster is a collection of interconnected computers that work together as a single system to provide higher availability, reliability, and scalability. Unlike standalone servers, clusters are designed to handle failures transparently so that services are not interrupted. HA clusters are generally deployed in one of two configurations: active-active and active-passive.

- Active-active cluster: Multiple nodes process requests simultaneously. This not only provides redundancy but also improves performance by distributing the load across nodes.

- Active-passive cluster: Consists of an active node and one or more standby (passive) nodes; a standby node takes over only if the active node fails.
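To make the difference concrete, here is a minimal Python sketch of how requests might be routed in each mode. The node names, the `healthy` set (which in a real cluster would come from health monitoring), and the round-robin policy are illustrative assumptions, not part of any specific cluster product.

```python
from itertools import cycle

# Hypothetical node names, listed in priority order.
NODES = ["node1", "node2", "node3"]


def active_passive_target(nodes, healthy):
    """Return the single node that serves all traffic: the first healthy one.

    The remaining healthy nodes stay idle as standbys until it fails.
    """
    for node in nodes:
        if node in healthy:
            return node
    return None  # no healthy node left, so the service is down


def active_active_targets(nodes, healthy, n_requests):
    """Distribute n_requests round-robin across every healthy node."""
    live = [node for node in nodes if node in healthy]
    if not live:
        return []
    rotation = cycle(live)
    return [next(rotation) for _ in range(n_requests)]


if __name__ == "__main__":
    healthy = set(NODES)
    print(active_passive_target(NODES, healthy))     # node1
    print(active_active_targets(NODES, healthy, 4))  # ['node1', 'node2', 'node3', 'node1']
    healthy.discard("node1")                         # simulate a failure of node1
    print(active_passive_target(NODES, healthy))     # node2 (the standby takes over)
    print(active_active_targets(NODES, healthy, 4))  # ['node2', 'node3', 'node2', 'node3']
```

In the active-passive case a failure changes which node serves everything; in the active-active case it only shrinks the pool that shares the load.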

The components of a Linux HA cluster typically include hardware nodes, networking, storage, cluster software, and the applications configured to run on the cluster.

Key Technologies and Tools in Linux HA Clusters

Linux HA clusters utilize multiple tools and technologies to ensure system availability:

- Pacemaker: An open-source cluster resource manager that decides where resources (such as virtual IPs, web servers, and databases) run, based on predefined policies, and recovers them when a node or resource fails.

- Corosync: Provides the messaging layer for Linux cluster solutions, keeping all nodes in continuous communication so that each node knows the status of the others.

- DRBD (Distributed Replicated Block Device): Replicates data between the storage devices of different nodes in real time, providing data redundancy.

- Linux Virtual Server (LVS): Provides load balancing across cluster server nodes, improving scalability.
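Pacemaker's placement decisions are driven by scores: constraints give each node a preference for a resource, and the resource runs on the eligible node with the highest total. The Python sketch below is a deliberately simplified model of that idea (Pacemaker's real allocator also handles colocation, ordering, utilization, and more). The function names are hypothetical; only the INFINITY value and its addition rules are taken from Pacemaker's documentation.

```python
INFINITY = 1_000_000  # Pacemaker's documented value for the INFINITY score


def add_scores(a, b):
    """Add two scores following Pacemaker's INFINITY rules (simplified).

    -INFINITY wins over everything, then INFINITY; finite sums are clamped.
    """
    if a <= -INFINITY or b <= -INFINITY:
        return -INFINITY
    if a >= INFINITY or b >= INFINITY:
        return INFINITY
    return max(-INFINITY, min(INFINITY, a + b))


def place_resource(online_nodes, location_scores, current_node=None, stickiness=0):
    """Pick the online node with the highest score, or None if every node is banned.

    location_scores maps node -> score (unlisted nodes score 0); stickiness is a
    bonus for the node the resource already runs on, which discourages needless moves.
    """
    best_node, best_score = None, -INFINITY
    for node in online_nodes:
        score = location_scores.get(node, 0)
        if node == current_node:
            score = add_scores(score, stickiness)
        # Strict '>' skips -INFINITY (banned) nodes and keeps the earlier node on ties.
        if score > best_score:
            best_node, best_score = node, score
    return best_node


if __name__ == "__main__":
    prefs = {"node1": 50, "node2": 100}
    print(place_resource(["node1", "node2"], prefs))  # node2: highest preference
    # Already running on node1 with stickiness 100: 150 beats 100, so it stays put.
    print(place_resource(["node1", "node2"], prefs, current_node="node1", stickiness=100))
    print(place_resource(["node1"], {"node1": -INFINITY}))  # None: banned everywhere
```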

Architecture of a Linux HA Cluster

The architecture of an HA cluster in a Linux environment varies with requirements, but it usually contains several key components:

- Node: An individual server; the nodes work together to provide services.

- Shared Storage: Storage that every node can access, which is essential for keeping service data consistent when a service moves between nodes.

- Virtual IP Address: A floating IP address that moves to whichever node is running the service, providing a failover mechanism at the network level.

- Cluster Services: Software applications and services configured to run on the cluster.
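These components only help if they move together: clients follow the virtual IP, so the service and its data have to come up on the same node. Pacemaker expresses this with resource groups, whose members start in the listed order and stop in reverse order. The Python sketch below models that behavior; the class, its methods, and the resource names are hypothetical illustrations, not Pacemaker's API.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ResourceGroup:
    """Hypothetical model: resources that must run together on one node.

    Members start in list order and stop in reverse order. With the example
    ordering below, storage is available before the virtual IP is raised and
    the service starts.
    """
    name: str
    members: List[str]
    node: Optional[str] = None

    def start_on(self, node):
        self.node = node
        return [f"start {m} on {node}" for m in self.members]

    def stop(self):
        if self.node is None:
            return []
        actions = [f"stop {m} on {self.node}" for m in reversed(self.members)]
        self.node = None
        return actions

    def move_to(self, node):
        """Failover or migration: stop everything here, then start it all there."""
        return self.stop() + self.start_on(node)


if __name__ == "__main__":
    web = ResourceGroup("web", ["shared-fs", "virtual-ip", "web-service"])
    for action in web.start_on("node1") + web.move_to("node2"):
        print(action)
```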

Nodes exchange heartbeat signals through Corosync so that every node is continuously monitored. If a node fails, Pacemaker moves its resources to another node, minimizing downtime.
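The detect-and-recover loop described above can be sketched in Python as follows. This is a simplification under stated assumptions: Corosync actually uses a token-passing membership protocol (Totem) rather than simple per-node timers, and a production cluster fences a failed node before Pacemaker recovers its resources elsewhere. The class, function, and resource names are hypothetical; timestamps are passed in explicitly so the example is deterministic.

```python
class HeartbeatMonitor:
    """Hypothetical timeout-based failure detector (not Corosync's actual protocol)."""

    def __init__(self, nodes, timeout):
        self.timeout = timeout  # seconds of silence before a node is declared failed
        self.last_seen = {node: 0.0 for node in nodes}

    def heartbeat(self, node, now):
        self.last_seen[node] = now

    def failed_nodes(self, now):
        return sorted(n for n, seen in self.last_seen.items() if now - seen > self.timeout)


def reassign(assignments, failed, survivors):
    """Move resources off failed nodes onto the least-loaded survivor.

    assignments maps resource -> node. A resource is set to None (stopped)
    if no survivor is left.
    """
    result = dict(assignments)
    load = {node: 0 for node in survivors}
    for node in result.values():
        if node in load:
            load[node] += 1
    for resource in sorted(result):
        if result[resource] in failed:
            if not survivors:
                result[resource] = None
                continue
            target = min(survivors, key=lambda n: load[n])  # ties go to the earlier node
            result[resource] = target
            load[target] += 1
    return result


if __name__ == "__main__":
    nodes = ["node1", "node2", "node3"]
    monitor = HeartbeatMonitor(nodes, timeout=3.0)
    for node in nodes:
        monitor.heartbeat(node, now=1.0)
    monitor.heartbeat("node2", now=4.0)  # node2 and node3 keep reporting; node1 goes silent
    monitor.heartbeat("node3", now=4.0)
    failed = set(monitor.failed_nodes(now=5.0))
    survivors = [n for n in nodes if n not in failed]
    print(failed)
    print(reassign({"vip": "node1", "web": "node1", "db": "node2"}, failed, survivors))
```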

Setting Up a Linux HA Cluster

To set up a Linux HA cluster, follow these steps:

- Install the necessary software: Install and configure Pacemaker, Corosync, and other required tools on all nodes.

- Configure nodes: Define each node's role, including which services it will handle.

- Create cluster resources: Set up the resources managed by the cluster, such as virtual IPs, services, and applications.

- Test the cluster: Simulate failures to confirm that the cluster responds correctly and that services keep running with minimal interruption.
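On distributions that ship the `pcs` command-line tool (for example RHEL and its derivatives), the configuration and testing steps map onto a short command sequence. The Python sketch below prints that sequence as a dry run by default. It assumes pcs 0.10 or later (older releases use different `auth`, `setup`, and `standby` syntax), that the pcs, pacemaker, and corosync packages are already installed, that `pcsd` is running on every node, and that the `hacluster` user has a password. The node names, cluster name, and address are placeholders. Note that Pacemaker will not start resources while STONITH is enabled but no fencing device is defined; fencing setup is hardware-specific and is left out here.

```python
import shlex
import subprocess

# Hypothetical values: substitute your own node names, cluster name, and a free address.
NODES = ["node1", "node2"]
CLUSTER_NAME = "mycluster"
VIRTUAL_IP = "192.168.122.120"


def build_plan(nodes, cluster_name, vip, cidr=24):
    """Return pcs commands (pcs 0.10+ syntax) for the setup and test steps."""
    first = nodes[0]
    return [
        ["pcs", "host", "auth", *nodes, "-u", "hacluster"],  # authenticate to pcsd on all nodes
        ["pcs", "cluster", "setup", cluster_name, *nodes],    # generates the Corosync config
        ["pcs", "cluster", "start", "--all"],
        ["pcs", "resource", "create", "ClusterIP", "ocf:heartbeat:IPaddr2",
         f"ip={vip}", f"cidr_netmask={cidr}", "op", "monitor", "interval=30s"],
        ["pcs", "status"],
        # Failure test: drain the first node and check that ClusterIP moves elsewhere.
        ["pcs", "node", "standby", first],
        ["pcs", "status"],
        ["pcs", "node", "unstandby", first],
    ]


def run_plan(plan, dry_run=True):
    """Print each command; only execute it when dry_run is False."""
    for argv in plan:
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)  # stop at the first failing command


if __name__ == "__main__":
    run_plan(build_plan(NODES, CLUSTER_NAME, VIRTUAL_IP))
```

Putting a node in standby is a controlled test; cutting a node's power or network is a harsher one that also exercises fencing.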

Practical Application

Linux HA clusters are widely used in industries such as finance, healthcare, and telecommunications, where system downtime translates directly into lost revenue and operational risk. For example, financial institutions use HA clusters to keep their trading platforms and transaction processing systems running at all times, ensuring continuous service availability for customers.

Challenges and Considerations

Deploying an HA cluster is not without its challenges. It requires careful planning of system resources, network configuration, and security. Performance tuning and load balancing also need attention to prevent any node from becoming a bottleneck. In addition, ensuring data consistency between nodes and handling "split-brain" scenarios (where nodes lose contact with each other and more than one of them tries to run the same service) are key issues that must be addressed through proper cluster configuration, such as quorum and fencing, and regular monitoring.
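A standard defense against split brain is quorum: after a network partition, only the group of nodes that can still see a strict majority of the cluster's votes keeps running resources, while the rest stop and can be fenced. The Python sketch below assumes one vote per node, which matches the default majority rule of Corosync's votequorum service; the function names are hypothetical.

```python
def has_quorum(visible_votes, total_votes):
    """True if a partition holds a strict majority of all configured votes."""
    return visible_votes > total_votes // 2


def quorate_partitions(partitions):
    """For a split cluster given as lists of node names (one vote each),
    report which partitions may keep running resources."""
    total = sum(len(part) for part in partitions)
    return [has_quorum(len(part), total) for part in partitions]


if __name__ == "__main__":
    print(quorate_partitions([["node1", "node2"], ["node3"]]))           # [True, False]
    print(quorate_partitions([["node1"], ["node2"]]))                    # [False, False]
    print(quorate_partitions([["node1", "node2"], ["node3", "node4"]]))  # [False, False]
```

The two-node and even-split cases show why two-node clusters need special handling (Corosync's `two_node` option, combined with fencing) and why odd node counts are often preferred.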

Advanced Topics and Trends

Integrating container technology with HA clusters is gaining attention. Container orchestrators such as Kubernetes are increasingly used alongside traditional HA setups for greater flexibility and scalability. Furthermore, advances in artificial intelligence and machine learning are beginning to play a role in predictive failure analysis, which could change how clusters anticipate and prevent operational problems.

Conclusion

Linux high availability clustering is a cornerstone technology for enterprises to achieve near-zero downtime. As enterprises continue to demand higher levels of service availability and data integrity, the importance of mastering HA cluster technology will only increase. Adopting these systems not only supports business continuity, but also provides a competitive advantage in today's fast-paced market.

The above is the detailed content of How to Build Resilience with Linux High Availability Clustering. For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Hot Topics

1794

1794

16

16

1739

1739

56

56

1590

1590

29

29

1468

1468

72

72

267

267

587

587

Install LXC (Linux Containers) in RHEL, Rocky & AlmaLinux

Jul 05, 2025 am 09:25 AM

Install LXC (Linux Containers) in RHEL, Rocky & AlmaLinux

Jul 05, 2025 am 09:25 AM

LXD is described as the next-generation container and virtual machine manager that offers an immersive for Linux systems running inside containers or as virtual machines. It provides images for an inordinate number of Linux distributions with support

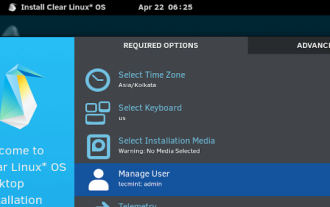

Clear Linux Distro - Optimized for Performance and Security

Jul 02, 2025 am 09:49 AM

Clear Linux Distro - Optimized for Performance and Security

Jul 02, 2025 am 09:49 AM

Clear Linux OS is the ideal operating system for people – ahem system admins – who want to have a minimal, secure, and reliable Linux distribution. It is optimized for the Intel architecture, which means that running Clear Linux OS on AMD sys

7 Ways to Speed Up Firefox Browser in Linux Desktop

Jul 04, 2025 am 09:18 AM

7 Ways to Speed Up Firefox Browser in Linux Desktop

Jul 04, 2025 am 09:18 AM

Firefox browser is the default browser for most modern Linux distributions such as Ubuntu, Mint, and Fedora. Initially, its performance might be impressive, however, with the passage of time, you might notice that your browser is not as fast and resp

How to create a self-signed SSL certificate using OpenSSL?

Jul 03, 2025 am 12:30 AM

How to create a self-signed SSL certificate using OpenSSL?

Jul 03, 2025 am 12:30 AM

The key steps for creating a self-signed SSL certificate are as follows: 1. Generate the private key, use the command opensslgenrsa-outselfsigned.key2048 to generate a 2048-bit RSA private key file, optional parameter -aes256 to achieve password protection; 2. Create a certificate request (CSR), run opensslreq-new-keyselfsigned.key-outselfsigned.csr and fill in the relevant information, especially the "CommonName" field; 3. Generate the certificate by self-signed, and use opensslx509-req-days365-inselfsigned.csr-signk

How to extract a .tar.gz or .zip file?

Jul 02, 2025 am 12:52 AM

How to extract a .tar.gz or .zip file?

Jul 02, 2025 am 12:52 AM

Decompress the .zip file on Windows, you can right-click to select "Extract All", while the .tar.gz file needs to use tools such as 7-Zip or WinRAR; on macOS and Linux, the .zip file can be double-clicked or unzip commanded, and the .tar.gz file can be decompressed by tar command or double-clicked directly. The specific steps are: 1. Windows processing.zip file: right-click → "Extract All"; 2. Windows processing.tar.gz file: Install third-party tools → right-click to decompress; 3. macOS/Linux processing.zip file: double-click or run unzipfilename.zip; 4. macOS/Linux processing.tar

How would you debug a server that is slow or has high memory usage?

Jul 06, 2025 am 12:02 AM

How would you debug a server that is slow or has high memory usage?

Jul 06, 2025 am 12:02 AM

If you find that the server is running slowly or the memory usage is too high, you should check the cause before operating. First, you need to check the system resource usage, use top, htop, free-h, iostat, ss-antp and other commands to check CPU, memory, disk I/O and network connections; secondly, analyze specific process problems, and track the behavior of high-occupancy processes through tools such as ps, jstack, strace; then check logs and monitoring data, view OOM records, exception requests, slow queries and other clues; finally, targeted processing is carried out based on common reasons such as memory leaks, connection pool exhaustion, cache failure storms, and timing task conflicts, optimize code logic, set up a timeout retry mechanism, add current limit fuses, and regularly pressure measurement and evaluation resources.

How to Burn CD/DVD in Linux Using Brasero

Jul 05, 2025 am 09:26 AM

How to Burn CD/DVD in Linux Using Brasero

Jul 05, 2025 am 09:26 AM

Frankly speaking, I cannot recall the last time I used a PC with a CD/DVD drive. This is thanks to the ever-evolving tech industry which has seen optical disks replaced by USB drives and other smaller and compact storage media that offer more storage

How to troubleshoot DNS issues on a Linux machine?

Jul 07, 2025 am 12:35 AM

How to troubleshoot DNS issues on a Linux machine?

Jul 07, 2025 am 12:35 AM

When encountering DNS problems, first check the /etc/resolv.conf file to see if the correct nameserver is configured; secondly, you can manually add public DNS such as 8.8.8.8 for testing; then use nslookup and dig commands to verify whether DNS resolution is normal. If these tools are not installed, you can first install the dnsutils or bind-utils package; then check the systemd-resolved service status and configuration file /etc/systemd/resolved.conf, and set DNS and FallbackDNS as needed and restart the service; finally check the network interface status and firewall rules, confirm that port 53 is not