How to optimize LIMIT with a large offset for pagination?

Jun 17, 2025 09:40 AM

Using LIMIT and OFFSET for deep pagination degrades performance because the database must scan and discard a large number of rows. 1. Use cursor-based pagination: remember the sort key (such as the ID or timestamp) of the last row on the previous page and fetch the next page from there, so the database does not have to scan all the preceding rows; 2. Make sure the sort columns are indexed, with a single-column or composite index, so the database can locate rows quickly; 3. Impose business limits on deep pagination, such as capping the maximum page number, guiding users to filter, or caching and loading results asynchronously. These methods can substantially improve pagination performance. For large data sets in particular, cursor pagination combined with proper indexing is the most recommended approach.

When you use LIMIT and OFFSET for paginated queries, performance tends to drop sharply once OFFSET gets large. The database has to read and discard every row before the offset before it can return the rows you actually want. The problem is especially visible with deep pagination (for example, beyond page 1,000).

To optimize LIMIT queries with large offsets, the key is to reduce unnecessary scans and index traversals.

1. Use cursor-based pagination instead of OFFSET pagination

The traditional LIMIT offset, size approach is inefficient when the offset is large, because the database must scan every row before the offset. A more efficient alternative is cursor-based pagination (also known as keyset pagination).

- Principle: instead of remembering the current page number, remember the value of the sort column (such as the ID or a timestamp) in the last row of the previous page, and start the next page right after that value.

- Sample SQL:

SELECT id, name FROM users WHERE id > 12345 ORDER BY id ASC LIMIT 20;

- Advantages: it does not slow down as the page number grows. With an index on the sort column, the database seeks directly to the cursor position and reads only the rows it returns.

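- Disadvantages: it cannot jump directly to an arbitrary page, and it relies on a stable sort key. If rows are inserted or deleted, or the sort column is updated, frequently, what a given "next page" contains can change between requests.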
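To make the idea concrete, here is a minimal sketch in Python using the standard-library sqlite3 module and an in-memory users table filled with made-up rows; the helper names (make_demo_db, fetch_page_offset, fetch_page_after, iterate_all) are hypothetical. It runs an OFFSET query and the cursor query from the sample above side by side. Both return the same pages, but the cursor version seeks past the last id seen instead of stepping over all earlier rows.

```python
import sqlite3


def make_demo_db(n_rows):
    """Build an in-memory users table with hypothetical demo data."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany(
        "INSERT INTO users (id, name) VALUES (?, ?)",
        [(i, f"user{i}") for i in range(1, n_rows + 1)],
    )
    return conn


def fetch_page_offset(conn, page, size):
    # Traditional pagination: the database steps over page * size rows first.
    return conn.execute(
        "SELECT id, name FROM users ORDER BY id ASC LIMIT ? OFFSET ?",
        (size, page * size),
    ).fetchall()


def fetch_page_after(conn, last_id, size):
    # Cursor pagination: seek directly past the last id from the previous page.
    return conn.execute(
        "SELECT id, name FROM users WHERE id > ? ORDER BY id ASC LIMIT ?",
        (last_id, size),
    ).fetchall()


def iterate_all(conn, size):
    """Walk every page with the cursor, yielding one page at a time."""
    last_id = 0  # assumption: ids are positive integers
    while True:
        page = fetch_page_after(conn, last_id, size)
        if not page:
            break
        yield page
        last_id = page[-1][0]  # the cursor is the id of the last row returned
```

For example, with make_demo_db(95) and a page size of 20, iterate_all yields five pages, the last holding 15 rows, and each one matches the OFFSET result for the same page. The client only needs to send back the last id it received, not a page number.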

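Note: timestamps are often not unique, so if you use a timestamp as the cursor, rows that share the same timestamp can be skipped or repeated at page boundaries. It is best to combine the timestamp with a unique column (such as the primary key), both in ORDER BY and in the cursor condition.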
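The tie-breaking rule in the note above is easy to get wrong, so here is a small Python/sqlite3 sketch of a composite (created_at, id) cursor. The events table, its index, and the helper names are hypothetical, and the demo data deliberately gives 10 rows each of only three distinct timestamps. Setting use_id=False switches to a timestamp-only cursor, which silently drops the rows that share the last timestamp of a page.

```python
import sqlite3

ORDER = " ORDER BY created_at, id LIMIT ?"


def make_events_db():
    """In-memory events table with many duplicate timestamps (hypothetical data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, created_at TEXT NOT NULL)")
    conn.execute("CREATE INDEX idx_events_created_id ON events (created_at, id)")
    # 30 rows but only 3 distinct timestamps, so each timestamp is shared by 10 rows.
    conn.executemany(
        "INSERT INTO events (id, created_at) VALUES (?, ?)",
        [(i, f"2025-06-17 09:4{i % 3}:00") for i in range(1, 31)],
    )
    return conn


def next_page(conn, cursor, size, use_id=True):
    """Fetch the page after cursor = (created_at, id); cursor=None means the first page."""
    if cursor is None:
        return conn.execute("SELECT id, created_at FROM events" + ORDER, (size,)).fetchall()
    last_ts, last_id = cursor
    if use_id:
        # Composite cursor: a later timestamp, or the same timestamp with a larger unique id.
        where = " WHERE created_at > ? OR (created_at = ? AND id > ?)"
        params = (last_ts, last_ts, last_id, size)
    else:
        # Timestamp-only cursor: skips remaining rows that share the last timestamp.
        where = " WHERE created_at > ?"
        params = (last_ts, size)
    return conn.execute("SELECT id, created_at FROM events" + where + ORDER, params).fetchall()


def walk(conn, size, use_id=True):
    """Follow the cursor page by page and collect every row returned."""
    rows, cursor = [], None
    while True:
        page = next_page(conn, cursor, size, use_id)
        if not page:
            return rows
        rows.extend(page)
        last_id, last_ts = page[-1]
        cursor = (last_ts, last_id)
```

With a page size of 7, walk(conn, 7) returns all 30 rows exactly once, while walk(conn, 7, use_id=False) returns only 21. PostgreSQL and MySQL also accept the shorter row-value form (created_at, id) > (?, ?); the expanded OR form is used here because it works everywhere, and whether either form uses the index efficiently depends on the engine and version.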

2. Add an index on the sort column

Whether you use traditional or cursor pagination, a suitable index on the sort column is the basic prerequisite.

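- If you sort by created_at, index created_at.

- If you often filter and sort on several columns together (for example, by status first and then by time), consider a composite index, with the equality-filtered column (status) first and the sort column (created_at) after it.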

An index lets the database jump straight to the starting row and read rows already in sorted order, instead of scanning and sorting the whole table.

Common practices:

- Single-column index:

CREATE INDEX idx_created_at ON your_table(created_at);

- Multi-column (composite) index:

CREATE INDEX idx_status_created ON your_table(status, created_at);

Even with OFFSET, these indexes help: the database can walk rows in index order instead of sorting the whole table, although it still has to step over every skipped entry.
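A quick way to confirm that a query actually uses an index is to inspect its execution plan (EXPLAIN in MySQL and PostgreSQL). The sketch below does this with SQLite's EXPLAIN QUERY PLAN through Python's built-in sqlite3 module, since that needs no server; your_table, its columns, and the plan helper are placeholders. Plan wording differs between SQLite versions, so the comments describe what to look for rather than exact output.

```python
import sqlite3


def plan(conn, sql, params=()):
    """Return SQLite's EXPLAIN QUERY PLAN output as one string (detail column only)."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE your_table (id INTEGER PRIMARY KEY, status TEXT, created_at TEXT)"
)

QUERY = (
    "SELECT id FROM your_table "
    "WHERE status = ? AND created_at > ? "
    "ORDER BY created_at LIMIT 20"
)
ARGS = ("active", "2025-06-01")

# Without an index: expect a full table scan plus a temporary B-tree for the sort.
before = plan(conn, QUERY, ARGS)

conn.execute("CREATE INDEX idx_status_created ON your_table(status, created_at)")

# With the composite index: expect a search on idx_status_created and no sort step.
after = plan(conn, QUERY, ARGS)

print(before)
print(after)
```

The same check is worth running against your real database after adding an index, because the optimizer may still ignore it if, for example, the query's filter and ORDER BY do not match the index's leading columns.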

3. Restrict or downgrade deep pagination

In many scenarios, users never actually page through hundreds of pages, yet the system still supports it. In that case, consider the following:

- Limit the maximum page number: for example, allow paging up to page 100 at most, and beyond that show a prompt such as "Please adjust the filter conditions".

- Cache or load asynchronously: for back-office analytics pages, results can be cached or generated asynchronously.

- Replace paging with search or filters: guide users to narrow the result set with full-text search and filters, rather than paging through results to find content.
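The page cap from the first item above can be enforced before any SQL is built. This is a minimal sketch; MAX_PAGE, PAGE_SIZE, PageTooDeep, and offset_for are hypothetical names, and the cap of 100 simply mirrors the example in the text.

```python
MAX_PAGE = 100  # hypothetical cap, mirroring the "page 100" example
PAGE_SIZE = 20  # hypothetical page size


class PageTooDeep(ValueError):
    """Raised when a client asks for a page beyond MAX_PAGE."""


def offset_for(page, page_size=PAGE_SIZE, max_page=MAX_PAGE):
    """Translate a 1-based page number into an OFFSET, refusing pages past the cap."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    if page > max_page:
        # The caller can turn this into a "Please adjust the filter conditions" prompt.
        raise PageTooDeep(f"only the first {max_page} pages are available")
    return (page - 1) * page_size
```

With this guard, the largest OFFSET the database ever receives is (MAX_PAGE - 1) * PAGE_SIZE, here 1980, which bounds the worst-case cost of an OFFSET query.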

These strategies do not solve the underlying technical problem, but in practice they effectively relieve the performance pressure.

Those are the main approaches.

Cursor pagination is the most recommended approach, especially for large data volumes; indexing is the baseline requirement; and business-level limits on deep paging are a practical complement.

The key to optimizing this kind of problem is not to avoid paging altogether, but to understand how users actually browse and how the data is accessed, and then choose the right strategy.

The above is the detailed content of "How to optimize LIMIT with a large offset for pagination?". For more information, please follow other related articles on the PHP Chinese website!

Hot AI Tools

Undress AI Tool

Undress images for free

Undresser.AI Undress

AI-powered app for creating realistic nude photos

AI Clothes Remover

Online AI tool for removing clothes from photos.

Clothoff.io

AI clothes remover

Video Face Swap

Swap faces in any video effortlessly with our completely free AI face swap tool!

Hot Article

Hot Tools

Notepad++7.3.1

Easy-to-use and free code editor

SublimeText3 Chinese version

Chinese version, very easy to use

Zend Studio 13.0.1

Powerful PHP integrated development environment

Dreamweaver CS6

Visual web development tools

SublimeText3 Mac version

God-level code editing software (SublimeText3)

Detailed explanation of how to use take and limit in Laravel

Mar 10, 2024 pm 05:51 PM

Detailed explanation of how to use take and limit in Laravel

Mar 10, 2024 pm 05:51 PM

"Detailed explanation of how to use take and limit in Laravel" In Laravel, take and limit are two commonly used methods, used to limit the number of records returned in database queries. Although their functions are similar, there are some subtle differences in specific usage scenarios. This article will analyze the usage of these two methods in detail and provide specific code examples. 1. Take method In Laravel, the take method is used to limit the number of records returned, usually combined with the orderBy method.

C++ program optimization: time complexity reduction techniques

Jun 01, 2024 am 11:19 AM

C++ program optimization: time complexity reduction techniques

Jun 01, 2024 am 11:19 AM

Time complexity measures the execution time of an algorithm relative to the size of the input. Tips for reducing the time complexity of C++ programs include: choosing appropriate containers (such as vector, list) to optimize data storage and management. Utilize efficient algorithms such as quick sort to reduce computation time. Eliminate multiple operations to reduce double counting. Use conditional branches to avoid unnecessary calculations. Optimize linear search by using faster algorithms such as binary search.

In-depth interpretation: Why is Laravel as slow as a snail?

Mar 07, 2024 am 09:54 AM

In-depth interpretation: Why is Laravel as slow as a snail?

Mar 07, 2024 am 09:54 AM

Laravel is a popular PHP development framework, but it is sometimes criticized for being as slow as a snail. What exactly causes Laravel's unsatisfactory speed? This article will provide an in-depth explanation of the reasons why Laravel is as slow as a snail from multiple aspects, and combine it with specific code examples to help readers gain a deeper understanding of this problem. 1. ORM query performance issues In Laravel, ORM (Object Relational Mapping) is a very powerful feature that allows

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed!

Mar 06, 2024 pm 02:33 PM

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed!

Mar 06, 2024 pm 02:33 PM

Decoding Laravel performance bottlenecks: Optimization techniques fully revealed! Laravel, as a popular PHP framework, provides developers with rich functions and a convenient development experience. However, as the size of the project increases and the number of visits increases, we may face the challenge of performance bottlenecks. This article will delve into Laravel performance optimization techniques to help developers discover and solve potential performance problems. 1. Database query optimization using Eloquent delayed loading When using Eloquent to query the database, avoid

What are some ways to resolve inefficiencies in PHP functions?

May 02, 2024 pm 01:48 PM

What are some ways to resolve inefficiencies in PHP functions?

May 02, 2024 pm 01:48 PM

Five ways to optimize PHP function efficiency: avoid unnecessary copying of variables. Use references to avoid variable copying. Avoid repeated function calls. Inline simple functions. Optimizing loops using arrays.

Laravel performance bottleneck revealed: optimization solution revealed!

Mar 07, 2024 pm 01:30 PM

Laravel performance bottleneck revealed: optimization solution revealed!

Mar 07, 2024 pm 01:30 PM

Laravel performance bottleneck revealed: optimization solution revealed! With the development of Internet technology, the performance optimization of websites and applications has become increasingly important. As a popular PHP framework, Laravel may face performance bottlenecks during the development process. This article will explore the performance problems that Laravel applications may encounter, and provide some optimization solutions and specific code examples so that developers can better solve these problems. 1. Database query optimization Database query is one of the common performance bottlenecks in Web applications. exist

Discussion on Golang's gc optimization strategy

Mar 06, 2024 pm 02:39 PM

Discussion on Golang's gc optimization strategy

Mar 06, 2024 pm 02:39 PM

Golang's garbage collection (GC) has always been a hot topic among developers. As a fast programming language, Golang's built-in garbage collector can manage memory very well, but as the size of the program increases, some performance problems sometimes occur. This article will explore Golang’s GC optimization strategies and provide some specific code examples. Garbage collection in Golang Golang's garbage collector is based on concurrent mark-sweep (concurrentmark-s

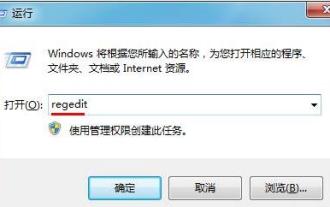

How to optimize the startup items of WIN7 system

Mar 26, 2024 pm 06:20 PM

How to optimize the startup items of WIN7 system

Mar 26, 2024 pm 06:20 PM

1. Press the key combination (win key + R) on the desktop to open the run window, then enter [regedit] and press Enter to confirm. 2. After opening the Registry Editor, we click to expand [HKEY_CURRENT_USERSoftwareMicrosoftWindowsCurrentVersionExplorer], and then see if there is a Serialize item in the directory. If not, we can right-click Explorer, create a new item, and name it Serialize. 3. Then click Serialize, then right-click the blank space in the right pane, create a new DWORD (32) bit value, and name it Star